Check Splunk search logs, just in case

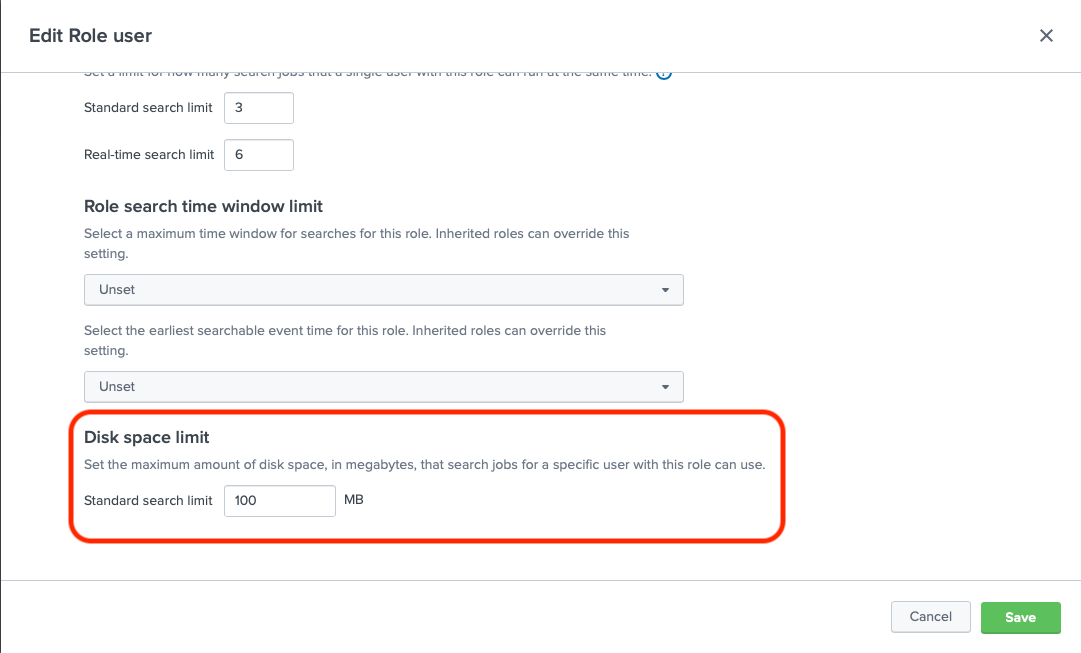

June 24, 2021

We have been working on an interesting case with one of our customers. Every role in Splunk has a defined disk space limit for search jobs, and by default the user role has only 100MB.

We are always cautious about how much data we bring to Splunk dashboards, and we limit everything to make sure our applications can handle large clusters.

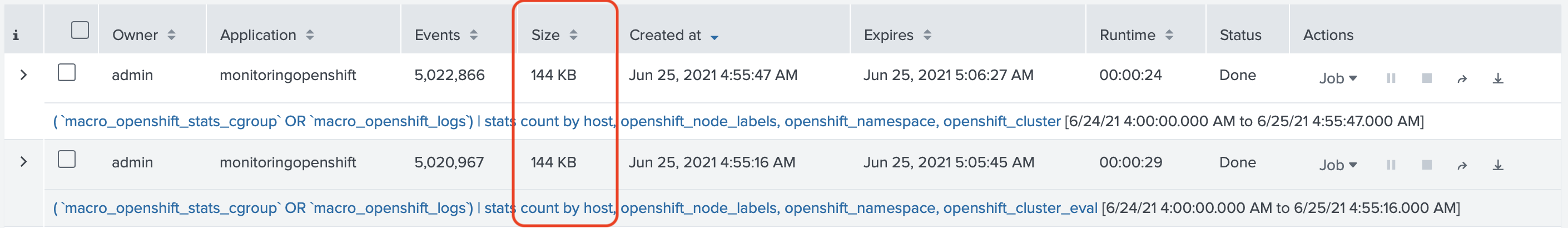

One search that was causing an issue was used to populate filters in various places of our "Monitoring OpenShift" application. Depending on the number of nodes, namespaces, and labels, we expect this search to return multiple thousands of values, but it should not take a lot of disk space.

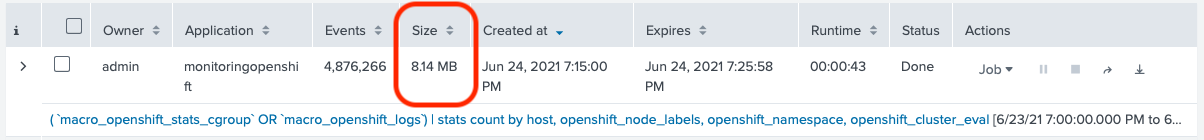

```
( `macro_openshift_stats_cgroup` OR `macro_openshift_logs`) | stats count by host, openshift_node_labels, openshift_namespace, openshift_cluster_eval
```

This search generated ~1,000 rows on our test cluster but took almost 10MB of disk space. In the customer's environment, we were dealing with hundreds of MB on disk, which is very unusual.

A simple change to the search brought the disk usage down to just several MB instead of hundreds.

```
( `macro_openshift_stats_cgroup` OR `macro_openshift_logs`) | stats count by host, openshift_node_labels, openshift_namespace, openshift_cluster
```

The only difference between the first search and the second one is the use of the openshift_cluster_eval field. It is a calculated field that first looks at the indexed field openshift_cluster and, if there is no value there, looks for the cluster label in openshift_node_labels (for backward compatibility).

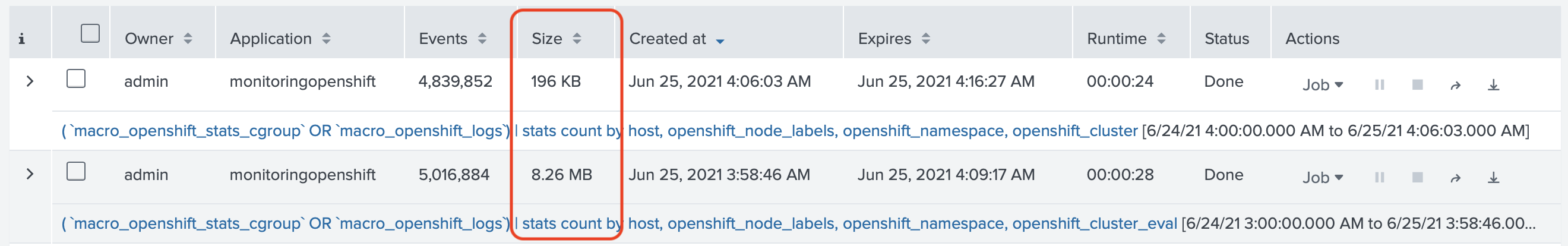

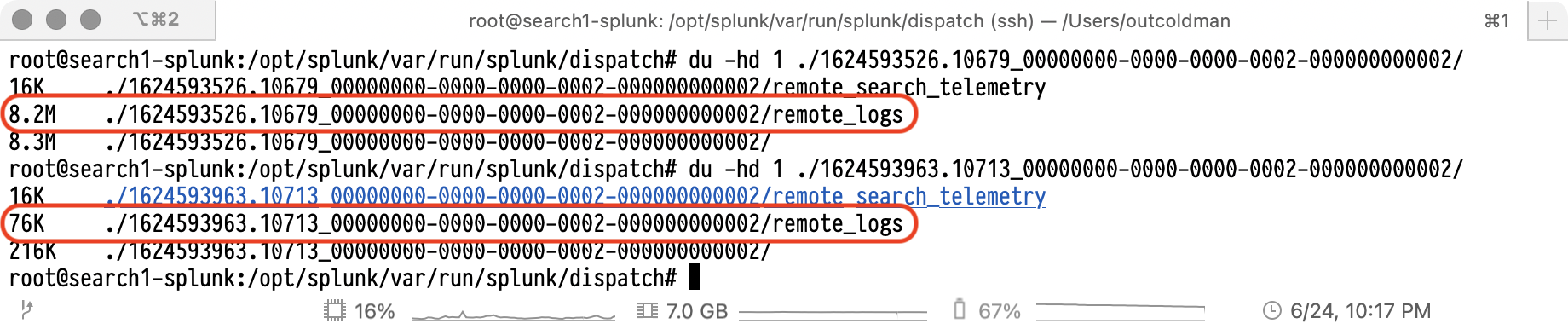
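In Splunk, a calculated field like this is defined with an EVAL-<field> setting in props.conf and is evaluated at search time. The exact expression our application uses is not reproduced here, so the Python function below only models the fallback logic described above. It is a sketch under hypothetical assumptions: openshift_node_labels holds "key=value" strings, and the label key is literally "cluster".

```python
from typing import Optional, Sequence


def openshift_cluster_eval(openshift_cluster: Optional[str],
                           openshift_node_labels: Sequence[str]) -> Optional[str]:
    """Model of the calculated field: prefer the indexed field, else fall back
    to a "cluster" node label.

    ASSUMPTION (hypothetical): labels are "key=value" strings and the
    cluster label key is exactly "cluster".
    """
    # 1. The indexed field wins when it has a non-empty value.
    if openshift_cluster:
        return openshift_cluster
    # 2. Backward compatibility: search the node labels for the cluster name.
    for label in openshift_node_labels:
        key, sep, value = label.partition("=")
        if sep and key == "cluster":
            return value
    # 3. Neither source has a value.
    return None


print(openshift_cluster_eval("prod", ["cluster=legacy"]))           # prod
print(openshift_cluster_eval(None, ["zone=a", "cluster=legacy"]))   # legacy
print(openshift_cluster_eval("", ["zone=a"]))                       # None
```

The point of the model is that the fallback branch runs for every event that lacks the indexed field, which is why a calculated field can do noticeably more work per event than a plain indexed field.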

Since both searches return the same results, something was clearly odd. On our test cluster, one search took 8.26MB and the other 196KB, a difference of about 42 times.

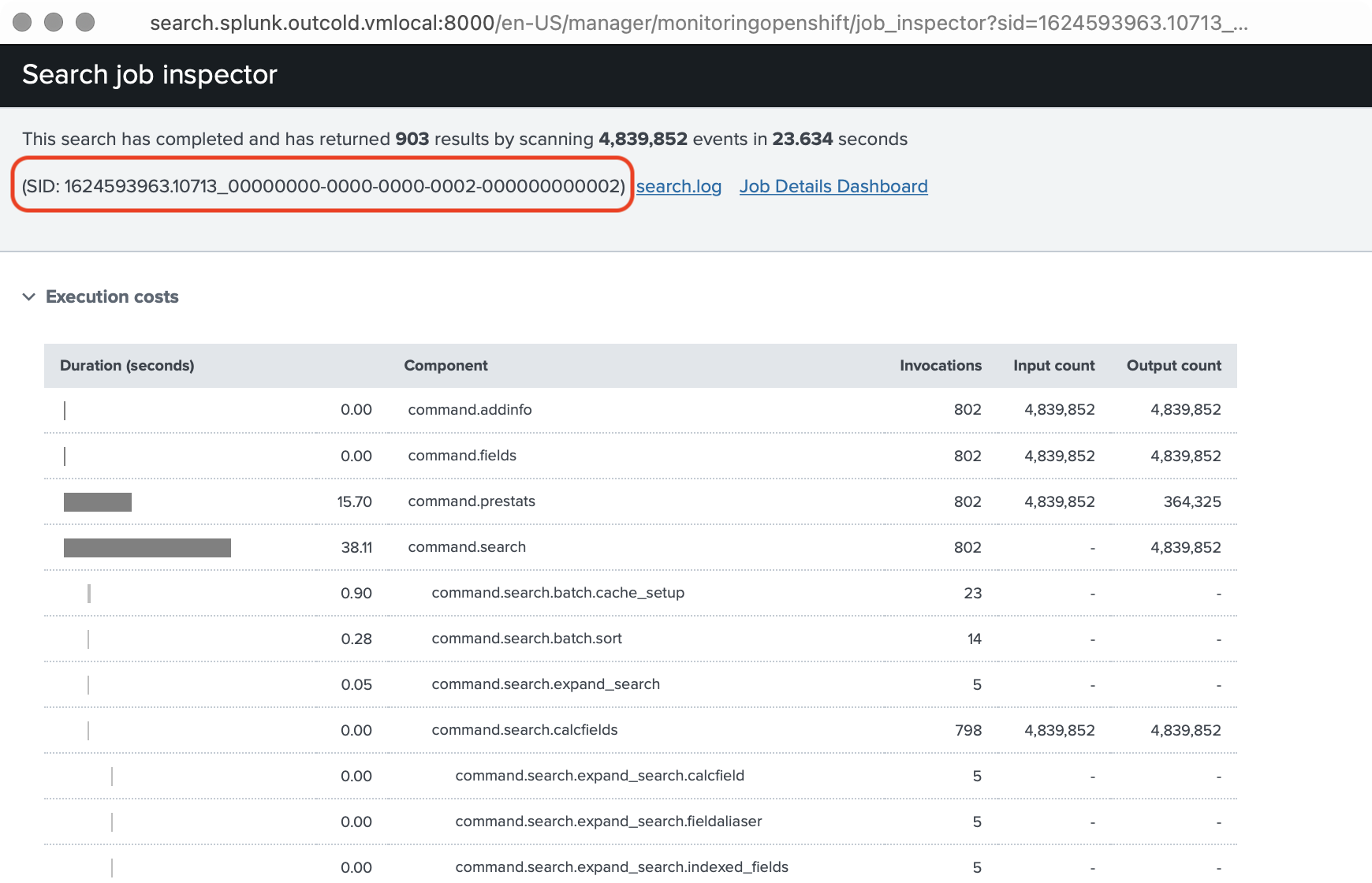

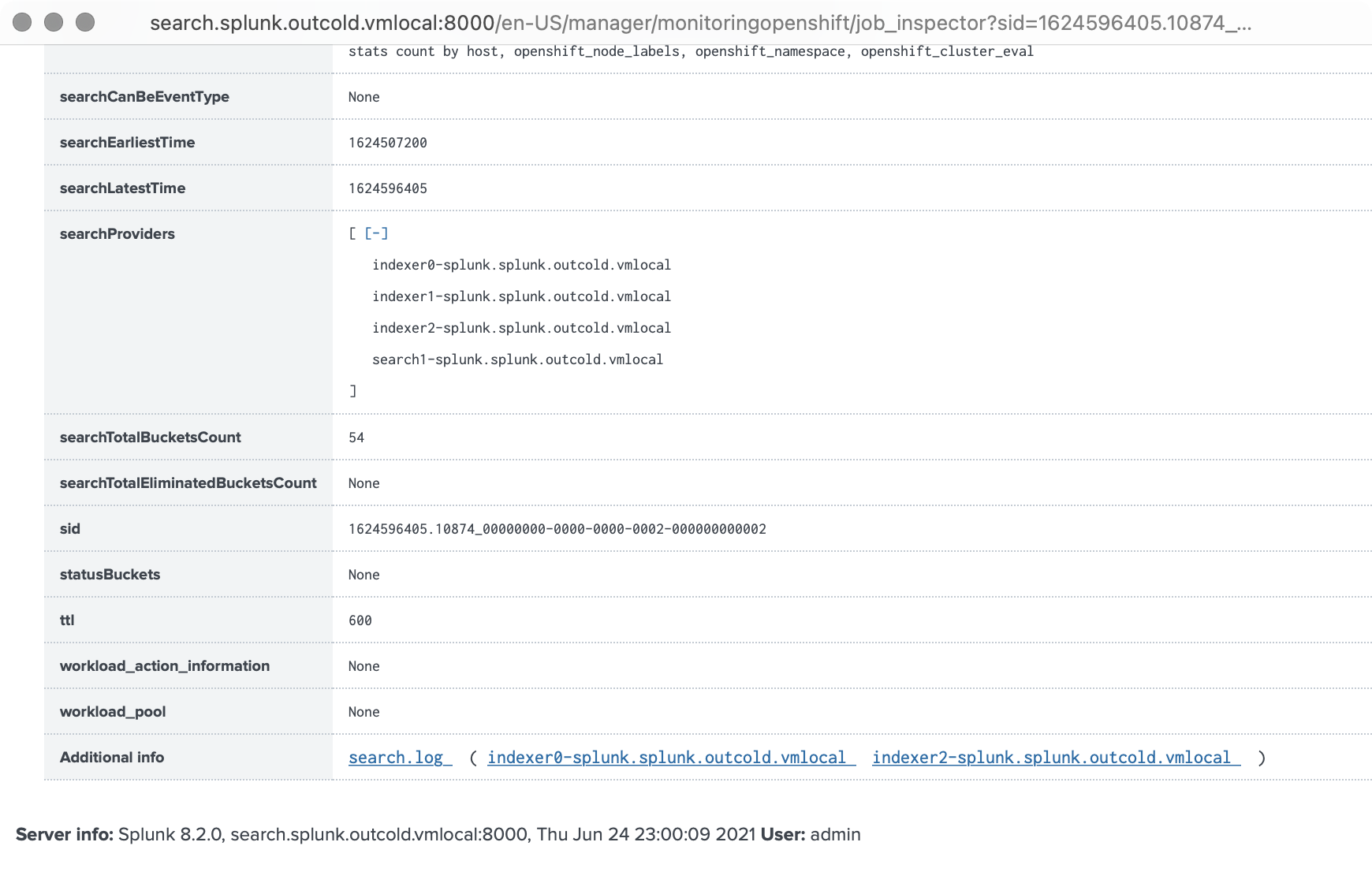

When you inspect the job (search), you can find its Search ID (SID).

Using this SID, you can find the folder on the search head that represents the job. It is under $SPLUNK_HOME/var/run/splunk/dispatch, so we looked into it.
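To compare two jobs without browsing the folders by hand, a small script can report how much space each top-level entry of a dispatch folder uses. This is a sketch that assumes SPLUNK_HOME is set on the search head (falling back to /opt/splunk, a common but not universal install path) and that you pass the SID as the only argument; the script name dispatch_sizes.py is hypothetical.

```python
import os
import sys
from pathlib import Path
from typing import List, Tuple


def dir_size(path: Path) -> int:
    """Total size in bytes of all regular files under path, recursively."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if not os.path.islink(file_path):  # skip symlinks
                total += os.path.getsize(file_path)
    return total


def dispatch_breakdown(job_dir: Path) -> List[Tuple[str, int]]:
    """Size of every top-level entry in a job's dispatch folder, largest first."""
    entries = []
    for entry in job_dir.iterdir():
        size = dir_size(entry) if entry.is_dir() else entry.stat().st_size
        entries.append((entry.name, size))
    return sorted(entries, key=lambda item: item[1], reverse=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python dispatch_sizes.py <SID>
    # ASSUMPTION: SPLUNK_HOME is set; /opt/splunk is only a fallback guess.
    splunk_home = Path(os.environ.get("SPLUNK_HOME", "/opt/splunk"))
    job_dir = splunk_home / "var" / "run" / "splunk" / "dispatch" / sys.argv[1]
    for name, size in dispatch_breakdown(job_dir):
        print(f"{size / 1024:12.1f} KB  {name}")
```

Running it once for each SID and comparing the two listings side by side makes an outlier such as a large remote_logs folder stand out immediately.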

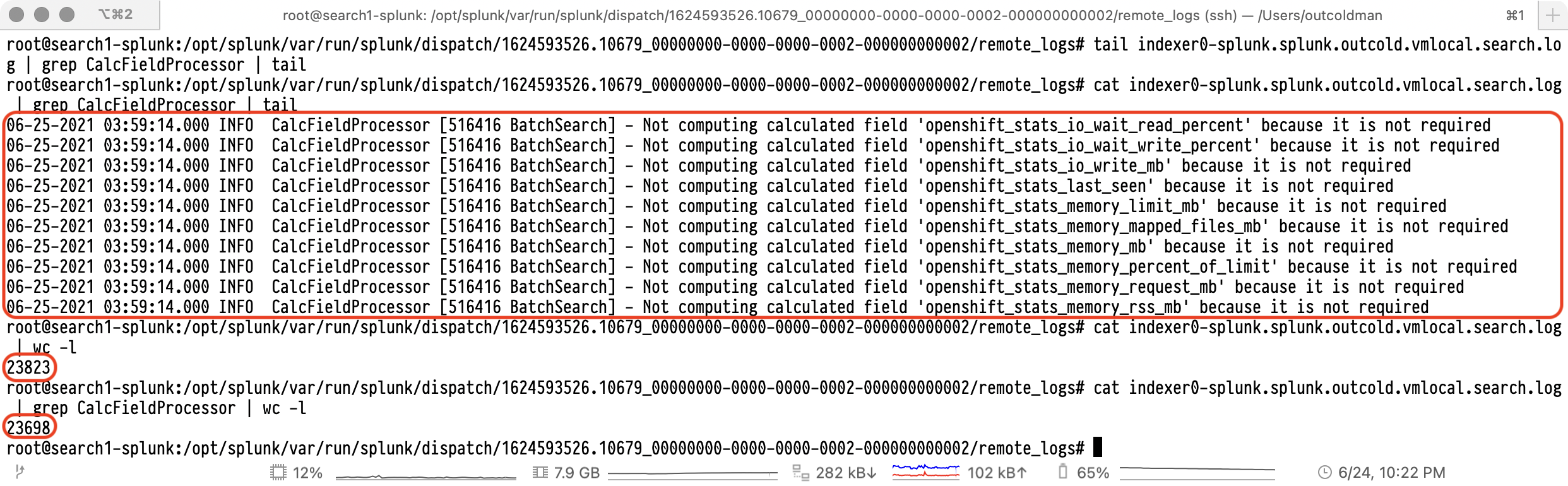

As you can see, the only real difference between those two searches is the size of the remote_logs folder. After some digging in those logs, we saw many repeating INFO messages saying that some of the calculated fields would be ignored. That is expected, but we definitely did not expect it to clog the search logs.
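To see which component produces the most lines, you can count log lines by level and component. The sketch below assumes each line starts with a date, a time, an optional timezone offset, the level, the component name, and " - " before the message; lines that do not match that shape (for example, continuation lines) are skipped. Point it at a search.log from the dispatch folder or at any of the log files under remote_logs; the script name top_components.py is hypothetical.

```python
import re
import sys
from collections import Counter
from typing import Iterable

# ASSUMPTION: lines look like "<date> <time> [+hhmm] <LEVEL> <Component> - <message>".
LINE_RE = re.compile(
    r"^\S+\s+\S+\s+(?:[+-]\d{4}\s+)?(?P<level>[A-Z]+)\s+(?P<component>\S+)\s+-\s"
)


def count_by_component(lines: Iterable[str]) -> Counter:
    """Count log lines per (level, component) pair, ignoring unparsable lines."""
    counts: Counter = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:
            counts[(match.group("level"), match.group("component"))] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python top_components.py path/to/search.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log_file:
        for (level, component), n in count_by_component(log_file).most_common(10):
            print(f"{n:8d}  {level:5s}  {component}")
```

If one INFO component dominates the output, that component is a candidate for the per-category override discussed below.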

The Splunk documentation has some information about Splunk logging; see Troubleshooting Manual: Enable debug logging.

The logs we are looking at are written by the search process, not by the Splunk daemon, so the configuration is in $SPLUNK_HOME/etc/log-searchprocess.cfg. In our case, we have a Search Head Cluster with an Indexer Cluster, and the searches are executed on the indexers, so if we want to remove those INFO messages, we need to modify the configuration on the indexers.

If you look at the default configuration in $SPLUNK_HOME/etc/log-searchprocess.cfg, you will find:

```
rootCategory=INFO,searchprocessAppender
appender.searchprocessAppender=RollingFileAppender
appender.searchprocessAppender.fileName=${SPLUNK_DISPATCH_DIR}/search.log
appender.searchprocessAppender.maxFileSize=10000000 # default: 10MB (specified in bytes).
appender.searchprocessAppender.maxBackupIndex=3
```

This means that every category that is not explicitly overridden logs at the INFO level by default. The logs for a single search on one indexer can grow to 40MB: the active search.log plus up to 3 rotated backups (maxBackupIndex=3), each capped at 10MB. So if you have 10 indexers, those logs could grow up to 400MB for each search.
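The worst-case bound is simple enough to compute directly. This sketch assumes log4j-style RollingFileAppender semantics, where maxBackupIndex counts rotated backups in addition to the active search.log, and a worst case in which every indexer fills its logs completely for the same search.

```python
def max_search_log_bytes(max_file_size: int, max_backup_index: int) -> int:
    """Upper bound for one search process: the active search.log plus
    max_backup_index rotated backups, each capped at max_file_size bytes."""
    return max_file_size * (max_backup_index + 1)


def cluster_worst_case_bytes(indexers: int,
                             max_file_size: int = 10_000_000,
                             max_backup_index: int = 3) -> int:
    """ASSUMPTION: every indexer's search process fills its logs completely."""
    return indexers * max_search_log_bytes(max_file_size, max_backup_index)


print(max_search_log_bytes(10_000_000, 3) / 1_000_000)  # 40.0
print(cluster_worst_case_bytes(10) / 1_000_000)         # 400.0
```

Plugging in your own maxFileSize, maxBackupIndex, and indexer count shows quickly how close a single chatty search can get to a 100MB role quota.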

There are two ways to fix that. First, you can override the level for a specific category (in our case, CalcFieldProcessor) by creating the file $SPLUNK_HOME/etc/log-searchprocess-local.cfg with the following content:

```
category.CalcFieldProcessor=WARN
```

The second option is to override the default log level for all categories in $SPLUNK_HOME/etc/log-searchprocess-local.cfg with the following content:

```
rootCategory=WARN,searchprocessAppender
```
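To reason about which level applies to a given category under either option, the override rules can be modeled in a few lines. This is a simplified sketch, not Splunk's actual parser. It assumes that keys in log-searchprocess-local.cfg replace the same keys from log-searchprocess.cfg, that a category.<Name> entry takes precedence over rootCategory, and the usual DEBUG < INFO < WARN < ERROR < FATAL ordering.

```python
from typing import Dict

# ASSUMPTION: standard log4j-style level ordering, lowest to highest.
LEVELS = ["DEBUG", "INFO", "WARN", "ERROR", "FATAL"]


def parse_cfg(text: str) -> Dict[str, str]:
    """Parse key=value lines, dropping '#' comments and blank lines."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "=" in line:
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def effective_level(category: str, default_cfg: str, local_cfg: str = "") -> str:
    """Level for a category: local keys override default keys, and
    category.<Name> overrides the level part of rootCategory."""
    merged = {**parse_cfg(default_cfg), **parse_cfg(local_cfg)}
    value = merged.get(f"category.{category}", merged["rootCategory"])
    return value.split(",")[0].strip()  # drop appender names after the level


def is_logged(message_level: str, threshold: str) -> bool:
    """True if a message at message_level passes the threshold level."""
    return LEVELS.index(message_level) >= LEVELS.index(threshold)


default = "rootCategory=INFO,searchprocessAppender"
print(effective_level("CalcFieldProcessor", default))  # INFO
print(effective_level("CalcFieldProcessor", default,
                      "category.CalcFieldProcessor=WARN"))  # WARN
# "SomeOtherCategory" is a hypothetical category name.
print(effective_level("SomeOtherCategory", default,
                      "rootCategory=WARN,searchprocessAppender"))  # WARN
print(is_logged("INFO", "WARN"))  # False
```

The per-category override is the narrower change, since every other category keeps logging at INFO; the rootCategory override also hides INFO messages you might want when troubleshooting other problems.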

After we applied those changes, searches no longer took so much disk space.

One important detail: if you are using Splunk Cloud, you do not have access to the Splunk file system. To find out whether you are affected by the same issue, run the search, open the Job Inspector, scroll to the very bottom, and expand Search Job Properties. Scroll all the way down again, and at the bottom of that page you will find the log files from the indexers. Download them and check whether anything is clogging the search logs.

If you see that those logs take a lot of space, contact Splunk Support and ask them to apply these configurations on the indexers in your Splunk Cloud cluster.