Forwarding logs to ElasticSearch and OpenSearch with Collectord

April 10, 2023

Large teams can have different requirements for their log management system, and some teams prefer ElasticSearch or OpenSearch. With this release, Collectord adds support for sending logs to ElasticSearch and OpenSearch.

You can install Collectord with ElasticSearch or OpenSearch support and run it in the same cluster as Collectord for Splunk. In that case, logs can be forwarded to both Splunk and ElasticSearch or OpenSearch.

Collectord version 5.20 and later supports sending logs to ElasticSearch and OpenSearch.

Our installation instructions provide a dedicated configuration file for ElasticSearch and another for OpenSearch. The main difference between them is the pre-configured mappings and templates.

You can find installation instructions on our website: Forwarding logs to ElasticSearch and OpenSearch with Collectord

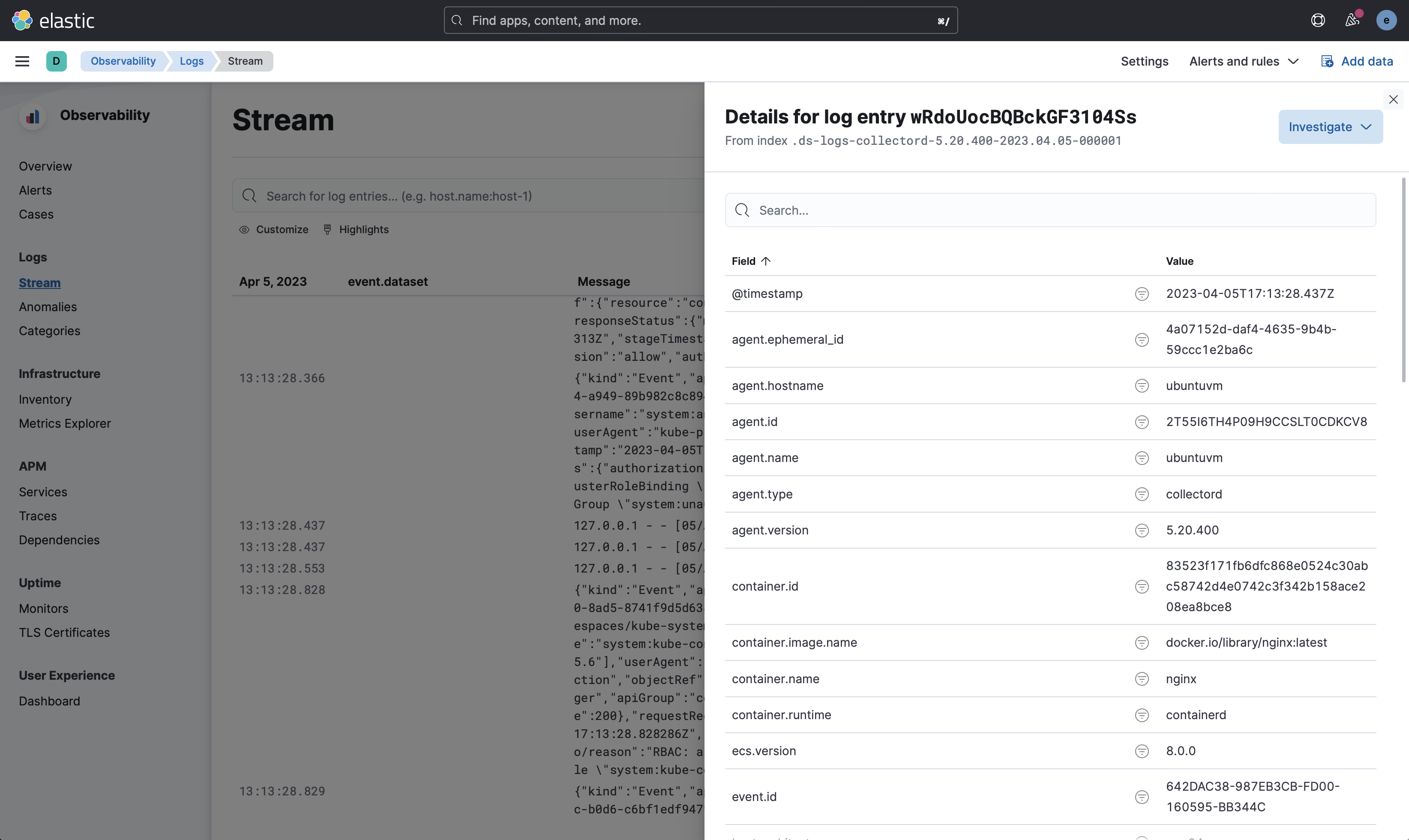

Preview of the ElasticSearch Observability Dashboard with logs ingested by Collectord

Collectord ingests logs in the Elastic Common Schema (ECS) format.

The following screenshot shows the ElasticSearch Observability Dashboard with logs ingested by Collectord.

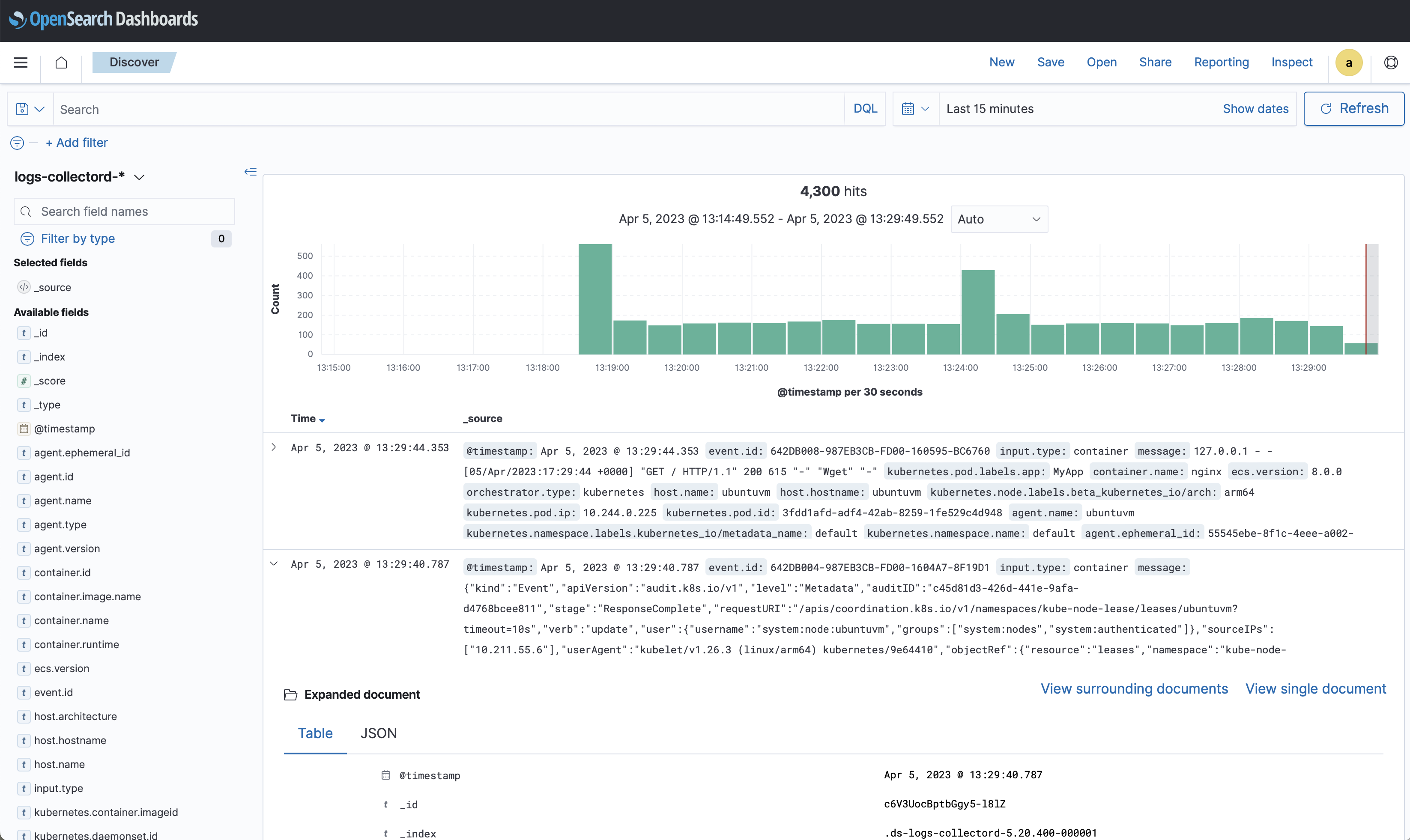

Preview of the OpenSearch Dashboards with logs ingested by Collectord

The following screenshot shows the OpenSearch Dashboards with logs ingested by Collectord.

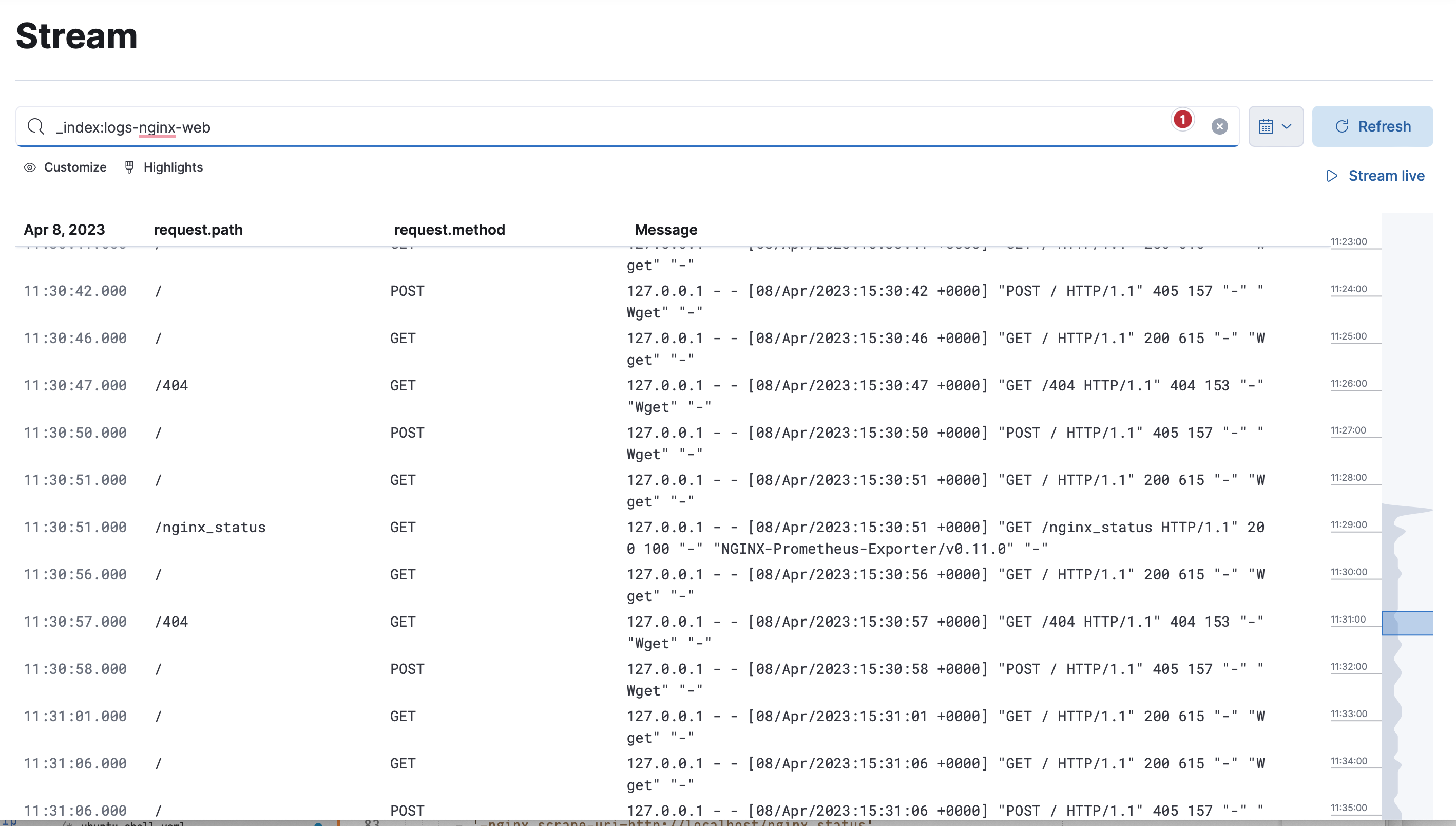

Extracting fields from the logs and redirecting to custom data streams

With Collectord annotations, you can configure field extractions and redirect logs to a different data stream.

In our example, we have an nginx pod running in the cluster.

Because we are going to extract additional fields, we first create a new data stream called `logs-nginx-web`.

To do that, we download the default index template created by Collectord and add the additional fields to it.

```bash
curl -k -u elastic:elastic https://localhost:9200/_index_template/logs-collectord-5.20.400 | jq '.index_templates[].index_template' > default.json
```

In the default.json file, we change `index_patterns` to `logs-nginx-web` and add the following fields to the `mappings.properties` section:

```json
"request": {
  "properties": {
    "remote_addr": {"type": "ip"},
    "remote_user": {"ignore_above": 1024, "type": "keyword"},
    "method": {"ignore_above": 1024, "type": "keyword"},
    "path": {"ignore_above": 1024, "type": "keyword"},
    "http_referer": {"ignore_above": 1024, "type": "keyword"},
    "http_user_agent": {"ignore_above": 1024, "type": "keyword"}
  }
},
"response": {
  "properties": {
    "status": {"type": "long"},
    "body_bytes": {"type": "long"}
  }
}
```

For the Pod, we add the following annotations. An important detail: a double underscore (`__`) in a capture-group name creates a nested field in ElasticSearch, so `request__remote_addr` is stored as `request.remote_addr`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  annotations:
    elasticsearch.collectord.io/stdout-logs-extraction: '^((?P<request__remote_addr>[\d.]+)\s+(?P<request__remote_user>-|\w+) -\s+\[(?P<timestamp>[^\]]+)\]\s+"(?P<request__method>[^\s]+)\s(?P<request__path>[^\s]+)\s(?P<request__type>[^"]+)"\s+(?P<response__status>\d+)\s+(?P<response__body_bytes>\d+)\s+"(?P<request__http_referer>[^"]*)"\s+"(?P<request__http_user_agent>[^"]*)" "-")$'
    elasticsearch.collectord.io/stdout-logs-timestampfield: timestamp
    elasticsearch.collectord.io/stdout-logs-timestampformat: '02/Jan/2006:15:04:05 -0700'
    elasticsearch.collectord.io/stdout-logs-index: 'logs-nginx-web'
# ...
```

After applying these annotations, we can review the logs in ElasticSearch.

If you define a mapping incorrectly, events that cannot be indexed are redirected to the data stream set by the `dataStreamFailedEvents` field under `[output.elasticsearch]`. You will also see warnings in the Collectord logs similar to the following:

```
WARN 2023/04/08 11:53:16.679396 outcoldsolutions.com/collectord/pipeline/output/elasticsearch/output.go:322: thread=1 datastream="logs-nginx-broken" first error from bulk insert: item create failed with status 400 (failed to parse field [request.remote_addr] of type [long] in document with id 'iwySYYcB8kxjWZpbYyHp'. Preview of field's value: '127.0.0.1')
WARN 2023/04/08 11:53:16.679426 outcoldsolutions.com/collectord/pipeline/output/elasticsearch/output.go:333: thread=1 datastream="logs-nginx-broken" response contains errors, 3 events failed to be indexed, posting to logs-collectord-failed-5.20.400
```
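The warning above comes from `request.remote_addr` being mapped as `long` while the extracted value is an IP address. One way to catch this before deploying is to check sample values against the intended mapping types. The Go sketch below is a hypothetical helper, not part of Collectord. It only models `ip`, `long` and `keyword`, and it is stricter than Elasticsearch itself, which can coerce values such as `"5.0"` into a `long`.

```go
package main

import (
	"fmt"
	"net/netip"
	"strconv"
)

// compatible reports whether an extracted string value is likely to be
// accepted by a field of the given mapping type (rough approximation).
func compatible(mappingType, value string) bool {
	switch mappingType {
	case "ip":
		_, err := netip.ParseAddr(value) // accepts IPv4 and IPv6
		return err == nil
	case "long":
		_, err := strconv.ParseInt(value, 10, 64)
		return err == nil
	case "keyword":
		return true // values over ignore_above are skipped, not rejected
	default:
		return true // types this sketch does not model are not checked
	}
}

func main() {
	// The failing case from the warning: an IP address in a long field.
	fmt.Println(compatible("long", "127.0.0.1")) // false
	fmt.Println(compatible("ip", "127.0.0.1"))   // true
}
```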

Forwarding logs from Persistent Volumes

Collectord can forward logs from Persistent Volumes without any additional deployments on the cluster.

To do that, add a single annotation to the Pod, `elasticsearch.collectord.io/volume.1-logs-name: 'logs'`, where `logs` is the name of the volume. In the example below, we also use some existing Collectord features to extract fields from the logs, in particular the correct timestamp.

Additionally, we use two new Collectord features. The first matches files by a glob pattern, where we use the `` variable. The second stores the acknowledgement database on the Persistent Volume itself, so when the volume is attached to another host, forwarding resumes from the last acknowledged position.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: postgres-pod0
  annotations:
    elasticsearch.collectord.io/volume.1-logs-name: 'logs'
    elasticsearch.collectord.io/volume.1-logs-glob: '/*.log'
    elasticsearch.collectord.io/volume.1-logs-extraction: '^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3} [^\s]+) (.+)$'
    elasticsearch.collectord.io/volume.1-logs-timestampfield: 'timestamp'
    elasticsearch.collectord.io/volume.1-logs-timestampformat: '2006-01-02 15:04:05.000 MST'
    elasticsearch.collectord.io/volume.1-logs-timestamplocation: 'Europe/Oslo'
    elasticsearch.collectord.io/volume.1-logs-onvolumedatabase: 'true'
spec:
  containers:
  - name: postgres
    image: postgres
    env:
    - name: POSTGRES_HOST_AUTH_METHOD
      value: trust
    command:
    - docker-entrypoint.sh
    args:
    - postgres
    - -c
    - logging_collector=on
    - -c
    - log_min_duration_statement=0
    - -c
    - log_directory=/var/log/postgresql/postgres-pod0/
    - -c
    - log_min_messages=INFO
    - -c
    - log_rotation_age=1d
    - -c
    - log_rotation_size=10MB
    volumeMounts:
    - name: data
      mountPath: /var/lib/postgresql/data
    - name: logs
      mountPath: /var/log/postgresql/
  volumes:
  - name: data
    emptyDir: {}
  - name: logs
    persistentVolumeClaim:
      claimName: myclaim0
```