Complete guide for forwarding application logs from Kubernetes and OpenShift environments to Splunk

January 3, 2021

We have helped many of our customers forward various logs from their Kubernetes and OpenShift environments to Splunk. We have learned a lot along the way, and that helped us build a lot of features into Collectord. We understand that some of these features can be hard to discover, so we would like to share our guide on how to set up proper forwarding of application logs to Splunk.

In our documentation, we have an example of how to easily forward application logs from a PostgreSQL database running inside a container. This time we will look at the JIRA application.

We assume that you already have Splunk and Kubernetes (or OpenShift) configured and have installed our solution for forwarding logs and metrics. If not, it takes 5 minutes, and you can request a trial license with our automated forms; please follow our documentation.

And one more thing, no side-car containers are required! Collectord is the container-native solution for forwarding logs from Docker, Kubernetes, and OpenShift environments.

This guide looks pretty long. The reason is that we go into a lot of detail and picked one of the most complicated examples.

1. Defining the logs

The first step is simple: let's find the logs that we want to forward. As we mentioned above, we will use a JIRA application running in a container. For simplicity, we will define it as a single Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: jira
    spec:
      containers:
      - name: jira
        image: atlassian/jira-software:8.14
        volumeMounts:
        - name: data
          mountPath: /var/atlassian/application-data/jira
      volumes:
      - name: data
        emptyDir: {}

Let's open a shell in this application and see what the log files look like.

    user@host# kubectl exec -it jira -- bash
    root@jira:/var/atlassian/application-data/jira# cd log
    root@jira:/var/atlassian/application-data/jira/log# ls -alh
    total 36K
    drwxr-x--- 2 jira jira 4.0K Dec 15 21:44 .
    drwxr-xr-x 9 jira jira 4.0K Dec 15 21:44 ..
    -rw-r----- 1 jira jira  27K Dec 15 21:44 atlassian-jira.log

And we will tail atlassian-jira.log to see how the logs are structured:

    2020-12-15 21:44:25,771+0000 JIRA-Bootstrap INFO [c.a.j.config.database.DatabaseConfigurationManagerImpl] The database is not yet configured. Enqueuing Database Checklist Launcher on post-database-configured-but-pre-database-activated queue
    2020-12-15 21:44:25,771+0000 JIRA-Bootstrap INFO [c.a.j.config.database.DatabaseConfigurationManagerImpl] The database is not yet configured. Enqueuing Post database-configuration launchers on post-database-activated queue
    2020-12-15 21:44:25,776+0000 JIRA-Bootstrap INFO [c.a.jira.startup.LauncherContextListener] Startup is complete. Jira is ready to serve.
    2020-12-15 21:44:25,778+0000 JIRA-Bootstrap INFO [c.a.jira.startup.LauncherContextListener] Memory Usage:
    ---------------------------------------------------------------------------------
    Heap memory : Used: 102 MiB. Committed: 371 MiB. Max: 1980 MiB
    Non-heap memory : Used: 71 MiB. Committed: 89 MiB. Max: 1536 MiB
    ---------------------------------------------------------------------------------
    TOTAL : Used: 173 MiB. Committed: 460 MiB. Max: 3516 MiB
    ---------------------------------------------------------------------------------

2. Telling Collectord to forward logs

The best scenario is when we can define a dedicated mount just for the path where the logs will be located. That will be the most performant way of setting up the forwarding pipeline. But considering that JIRA recommends mounting the data volume for all the data at /var/atlassian/application-data/jira, we can use that as well.

You can tell Collectord to match the logs with a glob or with match (a regexp; we like to use regex101.com for testing, make sure to switch to the Golang flavor). Glob is the easier and more performant way of matching logs, as we can split the glob pattern into path parts and know how deep we should go inside the volume to match the logs. With match, it is a bit more complicated, as .* can match any symbol in the path, including the path separator. So every time you configure match with a regexp, make sure that your volume does not have a really deep folder structure inside.

We always recommend starting with the glob. If you specify both glob and match patterns, only match will be used.
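To see why a regexp needs more care than a glob, here is a minimal Go sketch. It uses the standard library path.Match and regexp packages as stand-ins; it is not Collectord's actual matching code, and the second file path and the regexp are made up for illustration.

```go
package main

import (
	"fmt"
	"path"
	"regexp"
)

// globMatches reports whether name matches the glob pattern.
// In path.Match, "*" never crosses a "/" separator.
func globMatches(pattern, name string) bool {
	ok, err := path.Match(pattern, name)
	return err == nil && ok
}

// regexpMatches reports whether name matches the regular expression.
// Here ".*" matches any character, including "/".
func regexpMatches(expr, name string) bool {
	return regexp.MustCompile(expr).MatchString(name)
}

func main() {
	// Paths relative to the root of the mounted volume.
	// The second one is hypothetical, to show a deeper folder structure.
	names := []string{
		"log/atlassian-jira.log",
		"log/archive/2020/atlassian-jira.log.1",
	}
	for _, name := range names {
		fmt.Printf("%-40s glob=%v regexp=%v\n", name,
			globMatches("log/*.log*", name),
			regexpMatches(`^log/.*\.log`, name))
	}
}
```

The glob only matches files directly under log/, so a walker knows it never has to descend further. The regexp also matches the nested file, which means every folder under the volume has to be visited to find candidates.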

The data volume is mounted at /var/atlassian/application-data/jira, and the logs are written to the log folder inside it.

We can test the glob pattern by opening a shell in the container, changing to the path of the mounted volume, and running the glob pattern with ls:

    root@jira:/var/atlassian/application-data/jira# ls log/*.log*
    log/atlassian-jira.log

Ok, so now we know the glob pattern log/*.log*. We are going to annotate the Pod; these annotations will tell Collectord to look at the data volume recursively and try to find the logs that match log/*.log*.

    kubectl annotate pod jira \
        collectord.io/volume.1-logs-name=data \
        collectord.io/volume.1-logs-recursive=true \
        collectord.io/volume.1-logs-glob='log/*.log*'

After doing that, you can check the logs of the Collectord pod to see if the new logs were discovered. You should see something similar to:

    INFO 2020/12/15 21:59:29.359039 outcoldsolutions.com/collectord/pipeline/input/file/dir/watcher.go:76: watching /rootfs/var/lib/kubelet/pods/007be5c2-cd20-4d5e-8044-5e2399e28764/volumes/kubernetes.io~empty-dir/data/(glob = log/*.log*, match = )
    INFO 2020/12/15 21:59:29.359651 outcoldsolutions.com/collectord/pipeline/input/file/dir/watcher.go:178: data - added file /rootfs/var/lib/kubelet/pods/007be5c2-cd20-4d5e-8044-5e2399e28764/volumes/kubernetes.io~empty-dir/data/log/atlassian-jira.log

If you see only the first line, Collectord recognized the volume but could not find any logs matching the pattern. It is also possible that the configuration is incorrect, in which case you may need to follow the steps in the documentation to check whether Collectord can see the volumes.

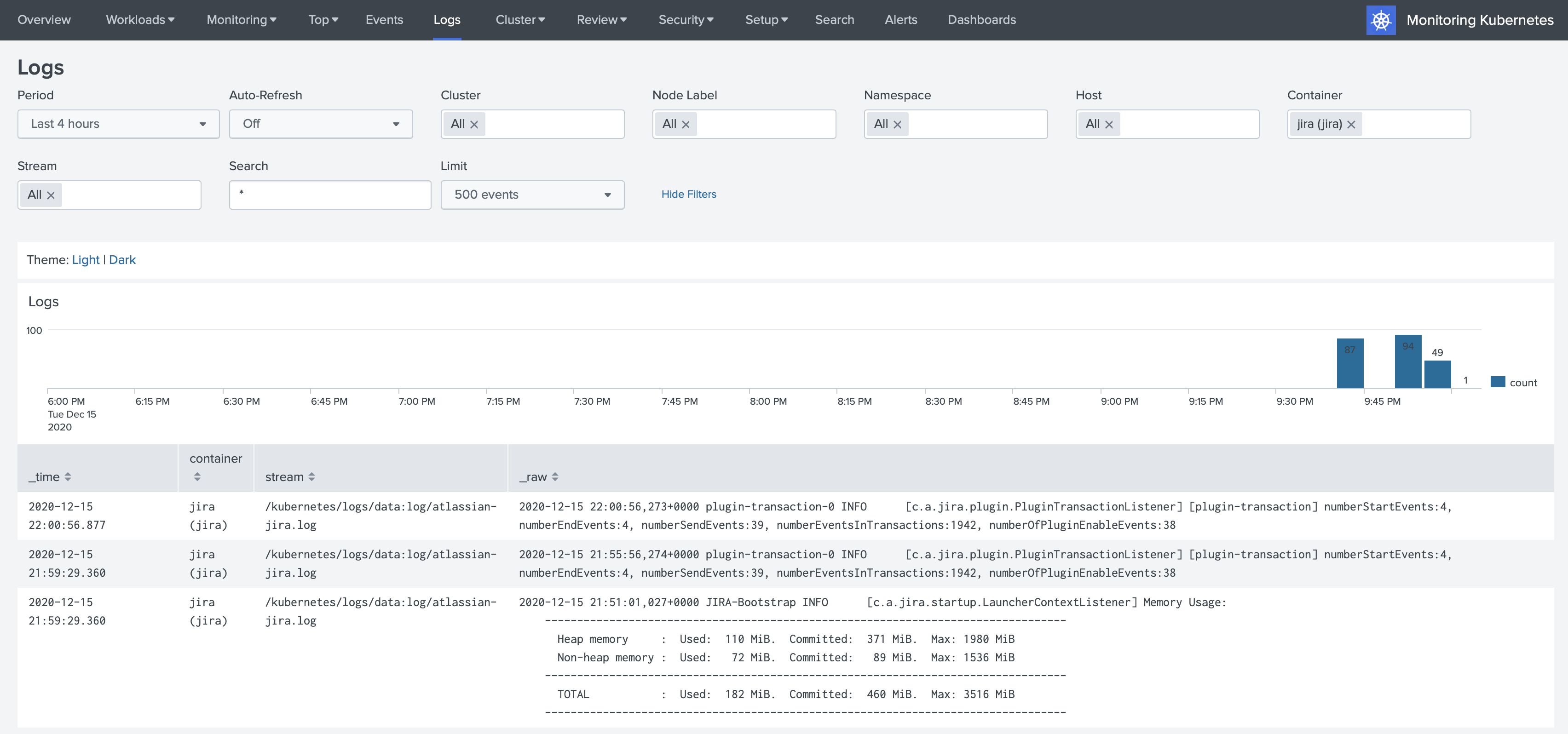

At this point we can go to Splunk and discover the logs in the Monitoring Kubernetes application.

3. Multiline events

By default Collectord merges all the lines starting with spaces with the previous lines. All the default configurations are under [input.app_logs] in the ConfigMap that you deploy with Collectord. Let's cover the most important of them.

- disabled = false - the feature of discovering application logs is enabled by default. Obviously, if there are no annotations telling Collectord to pick up the logs from containers, nothing is going to be forwarded.

- walkingInterval = 5s - how often Collectord will walk the path and see if there are new files matching the pattern.

- glob = *.log* - default glob pattern; in our example above we override it with log/*.log*.

- type = kubernetes_logs - default source type for the logs forwarded from containers.

- eventPatternRegex = ^[^\s] - the default pattern for how a new event should start (it should not start with a space character). That is why we see that some of the logs are already forwarded as multiline events.

- eventPatternMaxInterval = 100ms - we expect that every line in the message is written to the file within 100ms. When we see a larger interval between the lines, we assume those are different messages.

- eventPatternMaxWait = 1s - the maximum amount of time we are going to wait for new lines in the pipeline. We never want to block the pipeline, so we will wait a maximum of 1s after the first line of the event before we decide to forward the event as is to Splunk.

The default pattern for matching multiline events works great, but considering that we know exactly how to identify a new event by looking at the pattern of the messages, we can define a unique pattern for this pod with the regexp ^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}\+[^\s]+, where we are telling Collectord that every event should start with a timestamp like 2020-12-15 21:44:25,771+0000.

Let's add one more annotation:

    kubectl annotate pod jira \
        collectord.io/volume.1-logs-eventpattern='^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}\+[^\s]+'

4. Extracting time

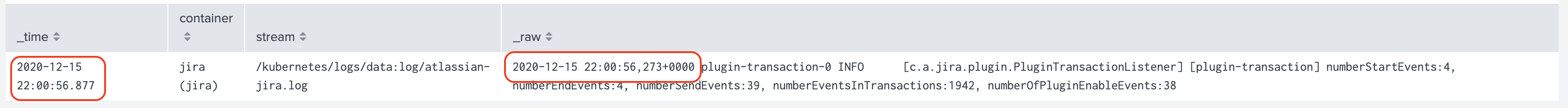

If you look at the events forwarded to Splunk, you will see that the timestamp of the event in Splunk does not match the timestamp of the event in the log line. Also, keeping the timestamp in the log line adds licensing cost for Splunk.

For the container logs we recommend just completely removing the timestamp from the log line, as the container runtime provides an accurate timestamp for every log line, see Timestamps in container logs.

We will try to extract the timestamp from the log lines and forward it as the correct timestamp of the event. In most cases this is much easier to do, but with the current format in JIRA it is a little bit trickier, so we will need to include some magic.

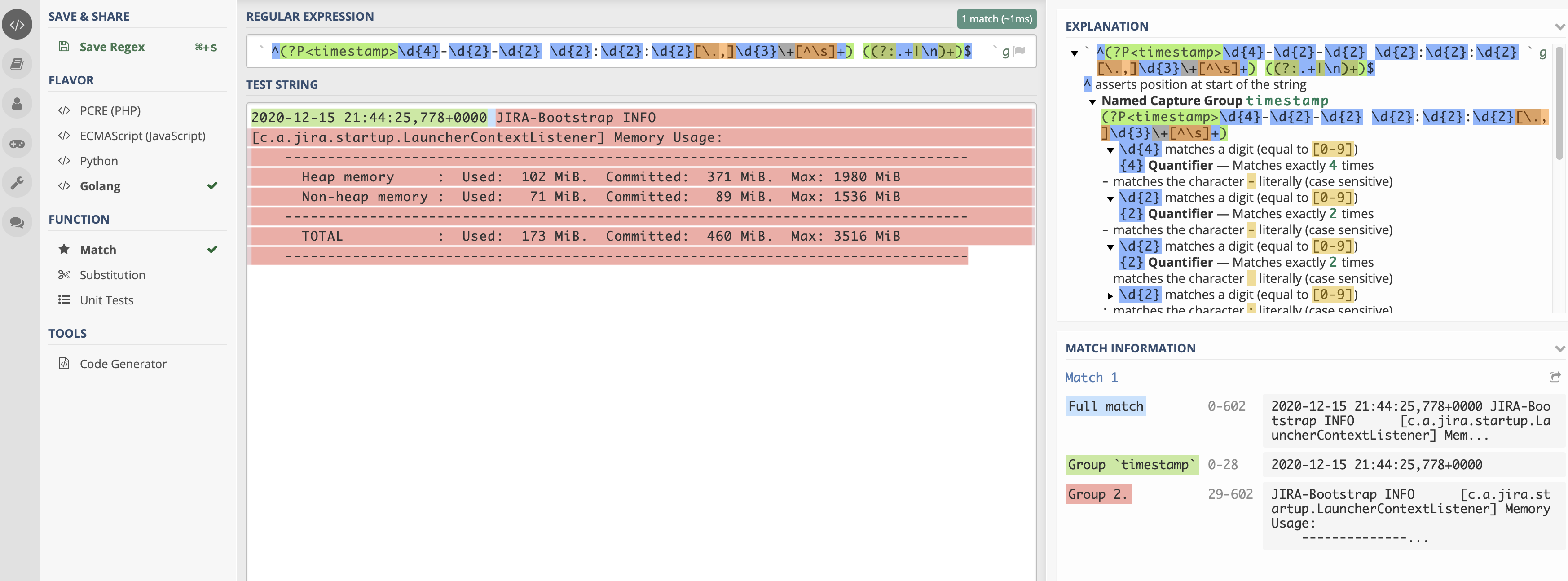

First we need to extract the timestamp as a separate field. For this we will use the already mentioned tool, regex101.com.

The regexp that I've built is ^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}[\.,]\d{3}\+[^\s]+) ((?:.+|\n)+)$. On the Match Information tab you can see that the whole event is matching (this could be tricky with multiline events), the timestamp field is extracted, and the rest is an unnamed group. The last unnamed group is forwarded to Splunk by Collectord as the message field. A few notes about this regexp:

- In the middle of the timestamp I don't match the subseconds separator with just a comma, but with a dot or a comma, [\.,]. I will show you the real reason for that below: we will need a workaround, as golang cannot parse a timestamp where subseconds are separated by a comma rather than a dot.

- (?:YOUR_REGEXP) - always use a non-capturing group when you need parentheses to group a part of the regexp but do not want to name it. That way you are not telling Collectord to treat it as another field.

Collectord is written in Go, so we use the Parse function from the Go time package to parse the time. You can always play with the golang playground, and we prepared a template for you to work out the right parsing layout for your timestamps.

    package main

    import (
        "fmt"
        "time"
    )

    func main() {
        t, err := time.Parse("2006-01-02 15:04:05,000-0700", "2020-12-15 21:44:25,771+0000")
        if err != nil {
            panic(err)
        }
        fmt.Println(t.String())
    }

If you try to run this code, you will see an error:

panic: parsing time "2020-12-15 21:44:25,771+0000" as "2006-01-02 15:04:05,000-0700": cannot parse "771+0000" as ",000"

As I mentioned above, the reason is that Go, at least in releases before 1.17, cannot parse milliseconds that follow a comma. With Collectord we can replace the comma with a dot, and then our timestamp layout will be 2006-01-02 15:04:05.000-0700.

First, these are the annotations that replace the comma with a dot:

    kubectl annotate pod jira \
        collectord.io/volume.1-logs-replace.fixtime-search='^(?P<timestamp_start>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),(?P<timestamp_end>\d{3}\+[^\s]+)' \
        collectord.io/volume.1-logs-replace.fixtime-val='${timestamp_start}.${timestamp_end}'

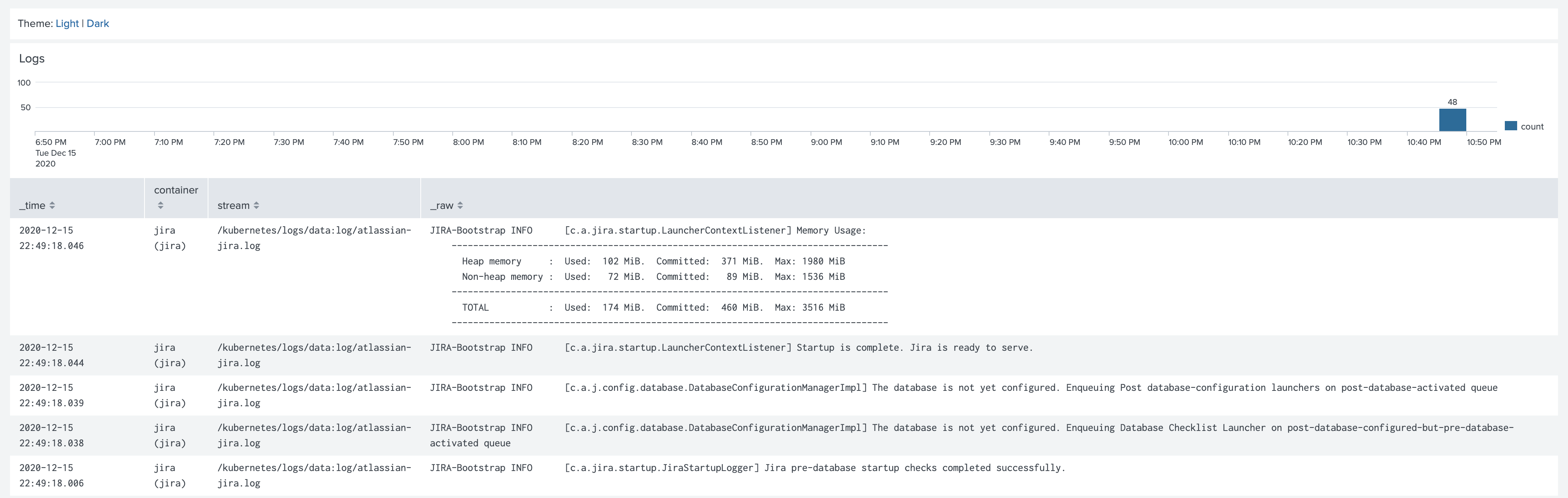

After that we can apply the annotations that extract the timestamp as a field and parse it as the timestamp of the event:

    kubectl annotate pod jira \
        collectord.io/volume.1-logs-extraction='^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}[\.,]\d{3}\+[^\s]+) ((?:.+|\n)+)$' \
        collectord.io/volume.1-logs-timestampfield='timestamp' \
        collectord.io/volume.1-logs-timestampformat='2006-01-02 15:04:05.000-0700'

The complete example

After applying all the annotations, our pod definition should look similar to the example below.

    apiVersion: v1
    kind: Pod
    metadata:
      name: jira
      annotations:
        collectord.io/volume.1-logs-name: 'data'
        collectord.io/volume.1-logs-recursive: 'true'
        collectord.io/volume.1-logs-glob: 'log/*.log*'
        collectord.io/volume.1-logs-eventpattern: '^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}\+[^\s]+'
        collectord.io/volume.1-logs-replace.fixtime-search: '^(?P<timestamp_start>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),(?P<timestamp_end>\d{3}\+[^\s]+)'
        collectord.io/volume.1-logs-replace.fixtime-val: '${timestamp_start}.${timestamp_end}'
        collectord.io/volume.1-logs-extraction: '^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}[\.,]\d{3}\+[^\s]+) ((?:.+|\n)+)$'
        collectord.io/volume.1-logs-timestampfield: 'timestamp'
        collectord.io/volume.1-logs-timestampformat: '2006-01-02 15:04:05.000-0700'
    spec:
      containers:
      - name: jira
        image: atlassian/jira-software:8.14
        volumeMounts:
        - name: data
          mountPath: /var/atlassian/application-data/jira
      volumes:
      - name: data
        emptyDir: {}

The logs in Splunk should now be well formatted.

Links

Read more about available annotations that control the forwarding pipeline in the links below