Monitoring Docker, OpenShift and Kubernetes - Version 5.9 - Support for multiple Splunk Clusters, streaming API Objects

This release improves streaming data to multiple Splunk Clusters, improves support for deploying multiple Collectord instances on the same node (in case you need to stream the same data to multiple clusters), and adds the ability to stream objects and their changes from the API Server.

This release also includes a journald input fix. In the previous version, Collectord could keep the file descriptors of rotated journald files open. If you are using the journald input (enabled by default), please upgrade.

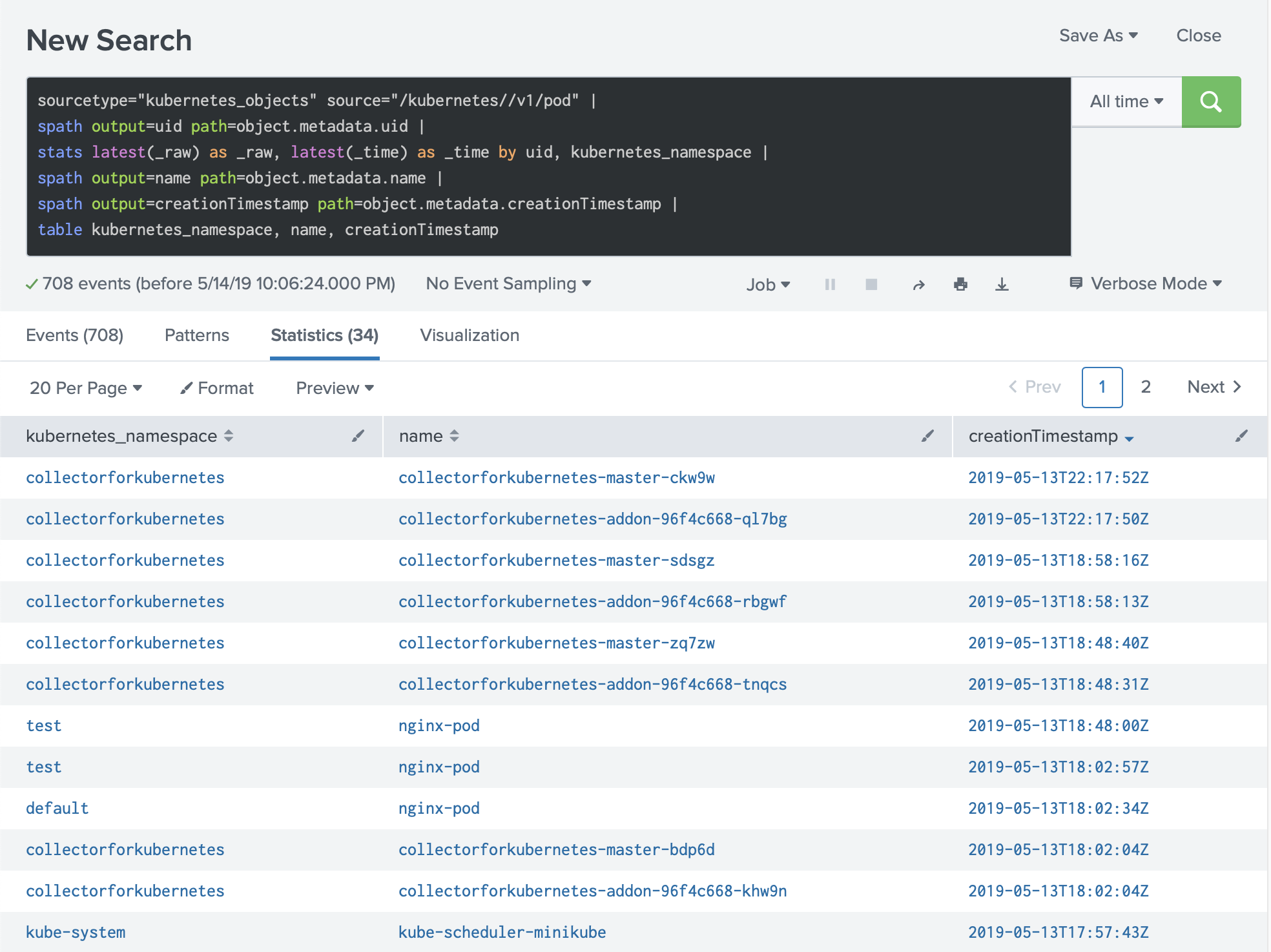

Streaming API Objects

Starting with version 5.9 you can stream all changes from the Kubernetes and Docker API servers to Splunk. This is useful if you want to monitor all changes to Workloads or ConfigMaps in Splunk, or if you want to recreate the Kubernetes Dashboard experience in Splunk. With the default configuration we don’t forward any objects from the API Server except events.

Please follow the updated documentation to set up streaming of the Kubernetes and Docker API Objects to Splunk.

- Monitoring OpenShift v5 - Streaming OpenShift Objects from the API Server

- Monitoring Kubernetes v5 - Streaming Kubernetes Objects from the API Server

- Monitoring Docker v5 - Streaming Docker Objects from the API

Support for multiple Splunk Clusters or Splunk Tokens

In case you want to use multiple HTTP Event Collector Tokens, or forward data from the namespaces to different Splunk Clusters, you can define more than one Splunk Output in the configuration.

In the default ConfigMap (or configuration for Docker) we include only the default Splunk output under the stanza [output.splunk]. You can define additional outputs and name them, for example:

    [output.splunk::prod1]
    url = https://prod1.hec.example.com:8088/services/collector/event/1.0
    token = AF420832-F61B-480F-86B3-CCB5D37F7D0D
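As a rough sketch of how the default output and several named outputs might sit side by side, the ConfigMap fragment below defines three stanzas. The ConfigMap name, namespace, file key, the `prod2` output, the URLs for the default and `prod2` outputs, and the placeholder tokens are all assumptions made for illustration; take the real names from your own deployment manifest.

```yaml
# Hypothetical ConfigMap fragment: names, file key, URLs and tokens are placeholders.
apiVersion: v1
kind: ConfigMap
metadata:
  name: collectord-config          # assumption: use the name from your manifest
  namespace: collectord            # assumption
data:
  outputs.conf: |                  # assumption: illustrative file key
    # Default output, used when nothing overrides it
    [output.splunk]
    url = https://hec.example.com:8088/services/collector/event/1.0
    token = 00000000-0000-0000-0000-000000000000

    # Named output prod1
    [output.splunk::prod1]
    url = https://prod1.hec.example.com:8088/services/collector/event/1.0
    token = 00000000-0000-0000-0000-000000000001

    # Named output prod2 (hypothetical second cluster)
    [output.splunk::prod2]
    url = https://prod2.hec.example.com:8088/services/collector/event/1.0
    token = 00000000-0000-0000-0000-000000000002
```

Each named stanza carries its own url and token, so the same pattern should cover both cases mentioned above: a different Splunk Cluster, or the same cluster with a different HTTP Event Collector token.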

See the details on how to configure the outputs:

- Monitoring OpenShift v5 - Configurations for Splunk HTTP Event Collector - Support for multiple Splunk clusters

- Monitoring Kubernetes v5 - Configurations for Splunk HTTP Event Collector - Support for multiple Splunk clusters

- Monitoring Docker v5 - Configurations for Splunk HTTP Event Collector - Support for multiple Splunk clusters

Using annotations you can override the default Splunk output and choose the Splunk Cluster that receives the data from a namespace, pod, or container. In the example below, we forward all the data from a specific namespace to the Splunk output prod1:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: prod1-namespace
      annotations:
        collectord.io/output: 'splunk::prod1'
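The same annotation can also be set on a single pod rather than on a whole namespace. A minimal sketch, assuming a hypothetical pod named `checkout` with a placeholder container and image; only the `collectord.io/output` annotation and its value come from the namespace example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: checkout                   # hypothetical pod name
  namespace: default
  annotations:
    # Send this pod's data to the named output [output.splunk::prod1]
    collectord.io/output: 'splunk::prod1'
spec:
  containers:
    - name: app                    # hypothetical container name
      image: nginx:stable          # placeholder image
```

For pods managed by a Deployment, the annotation would go under `spec.template.metadata.annotations` so that it ends up on the pods themselves.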

- Monitoring OpenShift v5 - Annotations - Change output destination

- Monitoring Kubernetes v5 - Annotations - Change output destination

- Monitoring Docker v5 - Annotations - Change output destination

Improved support for multiple Collectord deployments

If you need to stream the same data to multiple Splunk deployments, you can deploy more than one Collectord on one node. Some configuration changes are required to ensure that the deployments do not conflict with each other, primarily the location of the database that stores acknowledgement data.

Before 5.9, annotations were applied to all Collectord deployments. Starting with version 5.9 you can define a subdomain for the annotations under [general] with the key annotationsSubdomain, for example:

    [general]
    annotationsSubdomain = prod1

After that, for this specific deployment you can use annotations such as prod1.collectord.io/index=foo.
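In manifest form, a namespace annotated for the deployment configured with `annotationsSubdomain = prod1` might look like the sketch below. The namespace name, the index names, and the second subdomain `prod2` (standing in for a second Collectord deployment with `annotationsSubdomain = prod2`) are assumptions for illustration.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a                     # hypothetical namespace
  annotations:
    # Intended for the Collectord deployment with annotationsSubdomain = prod1
    prod1.collectord.io/index: 'foo'
    # Hypothetical: a second deployment with annotationsSubdomain = prod2
    prod2.collectord.io/index: 'bar'
```

Giving each deployment its own subdomain lets the same namespace carry separate settings for each Collectord instance.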

- Monitoring OpenShift v5 - Annotations

- Monitoring Kubernetes v5 - Annotations

- Monitoring Docker v5 - Annotations

Links

You can find more information about other minor updates by following the links below.

Release notes

- Monitoring OpenShift - Release notes

- Monitoring Kubernetes - Release notes

- Monitoring Docker - Release notes