Monitoring Tectonic in Splunk (Enterprise Kubernetes by CoreOS)

tl;dr

To install the Monitoring Kubernetes solution on Tectonic:

- Follow the instructions on Monitoring Kubernetes to install the collector and application.

- Install the DaemonSet with rsyslog, which forwards journald logs to /var/log/syslog.

kubectl apply -f https://raw.githubusercontent.com/outcoldsolutions/docker-journald-to-syslog/master/journald-to-syslog.yaml

Building Journald-To-Syslog image

Tectonic is a secure, automated, hybrid enterprise Kubernetes platform built by CoreOS.

Our solutions for Monitoring Kubernetes work with most Kubernetes distributions, and Tectonic is no exception. They just need a little help to get the host logs.

Tectonic uses CoreOS as the host Linux for containers. CoreOS is a very lightweight Linux that does not provide a package manager, so any additional software has to run in containers.

By default, CoreOS writes all host logs to journald, which stores them in a binary format. On most distributions, such as RHEL, we recommend installing rsyslog, which automatically configures streaming of all logs from journald to /var/log/. For CoreOS, we should do the same. The only difference is that we need to run rsyslog inside a container.

For the image outcoldsolutions/journald-to-syslog, I chose debian:stretch as the base image for a simple reason: it has the right version of journalctl, built with the right set of libraries.

If you ssh to one of your CoreOS boxes, you will find that journald was built with +LZ4 and is probably using it as the default compression.

$ journalctl --version

systemd 233

+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK -SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT -GNUTLS -ACL +XZ +LZ4 +SECCOMP +BLKID -ELFUTILS +KMOD -IDN default-hierarchy=legacy

For example, the journalctl in the ubuntu image is built without LZ4 (note the -LZ4):

$ docker run --rm ubuntu journalctl --version

systemd 229

+PAM +AUDIT +SELINUX +IMA +APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ -LZ4 +SECCOMP +BLKID +ELFUTILS +KMOD -IDN

But the journalctl you get in the debian image has it:

$ docker run --rm debian bash -c 'apt-get update && apt-get install -y systemd && journalctl --version'

systemd 232

+PAM +AUDIT +SELINUX +IMA +APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 +SECCOMP +BLKID +ELFUTILS +KMOD +IDN

If you decide to build your own image to forward logs from journald to syslog, make sure to check that its journalctl is compatible with the journal files on the host. You can test it by running journalctl -f over the journal files from your host Linux:

docker run --rm -it \
    -v /var/log/journal/:/var/log/journal/:ro \
    -v /etc/machine-id:/etc/machine-id:ro \
    debian \
    bash -c 'apt-get update && apt-get install -y systemd && journalctl -f'
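The compatibility check can also be done without reading the feature line by eye. The following is a minimal sketch, not part of the image: the has_feature helper is a hypothetical name, and the two feature strings are copied from the journalctl --version outputs shown earlier in this post.

```shell
#!/bin/sh
# has_feature FLAGS NAME (hypothetical helper): succeeds when the systemd
# feature string FLAGS contains "+NAME" as a separate word.
has_feature() {
    case " $1 " in
        *" +$2 "*) return 0 ;;
        *)         return 1 ;;
    esac
}

# Feature lines copied from the journalctl --version outputs above.
coreos_flags="+PAM +AUDIT +SELINUX +IMA -APPARMOR +SMACK -SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT -GNUTLS -ACL +XZ +LZ4 +SECCOMP +BLKID -ELFUTILS +KMOD -IDN default-hierarchy=legacy"
ubuntu_flags="+PAM +AUDIT +SELINUX +IMA +APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ -LZ4 +SECCOMP +BLKID +ELFUTILS +KMOD -IDN"

# Report LZ4 support for each captured feature line.
if has_feature "$coreos_flags" LZ4; then echo "coreos: LZ4 supported"; else echo "coreos: LZ4 missing"; fi
if has_feature "$ubuntu_flags" LZ4; then echo "ubuntu: LZ4 supported"; else echo "ubuntu: LZ4 missing"; fi
```

Against a live system, the same helper could be fed the last line of journalctl --version, for example has_feature "$(journalctl --version | tail -n 1)" LZ4.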

Inside Journald-To-Syslog image

rsyslog

I used rsyslog with imjournal to build this image. As an alternative, you can use syslog-ng with the systemd-journal source.

The configuration I use for rsyslog:

$ActionFileDefaultTemplate RSYSLOG_TraditionalFileFormat

module(load="imjournal" StateFile="/rootfs/var/log/syslog.statefile")

$outchannel log_rotation, /rootfs/var/log/syslog, 10485760, /usr/bin/logrotate.sh

*.* :omfile:$log_rotation

Where:

1. $ActionFileDefaultTemplate: this is just a default format for the syslog file output.

2. module(load="imjournal" ...): load journal logs and keep the state file /rootfs/var/log/syslog.statefile, so that reading can resume in case this container restarts.

3. $outchannel: create the output channel as a /rootfs/var/log/syslog file, and call /usr/bin/logrotate.sh if the file is larger than 10 MB (10485760 bytes).

4. *.* :omfile:$log_rotation: stream all data (in our case, only journal logs) to the channel created in (3).

log rotation

A nice thing about rsyslog is that it decides when to call log rotation. We just need to configure logrotate.

For that, I created a bash script:

#!/bin/bash

/usr/sbin/logrotate /etc/journald-to-syslog/logrotate.conf

And a default configuration that keeps five rotated files and rotates when the file grows beyond 10M (this should stay in sync with the size we used in rsyslog.conf).

/rootfs/var/log/syslog {
    rotate 5
    create
    size 10M
}
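Since the two sizes have to agree, a quick check can catch a mismatch before the image is built. This is a minimal sketch under one assumption: that logrotate interprets the k, M and G suffixes as powers of 1024. The to_bytes helper and the two variables are hypothetical names that simply hold the values from the configurations above.

```shell
#!/bin/sh
# to_bytes SIZE (hypothetical helper): convert a logrotate-style size to bytes.
# Assumption: the k/M/G suffixes are powers of 1024.
to_bytes() {
    case "$1" in
        *k) echo $(( ${1%k} * 1024 )) ;;
        *M) echo $(( ${1%M} * 1024 * 1024 )) ;;
        *G) echo $(( ${1%G} * 1024 * 1024 * 1024 )) ;;
        *)  echo "$1" ;;
    esac
}

rsyslog_limit=10485760   # size field of $outchannel in rsyslog.conf
logrotate_size=10M       # "size" directive in logrotate.conf

# Compare the two limits in bytes.
if [ "$(to_bytes "$logrotate_size")" -eq "$rsyslog_limit" ]; then
    echo "sizes are in sync"
else
    echo "size mismatch: rsyslog=$rsyslog_limit logrotate=$(to_bytes "$logrotate_size")"
fi
```

If the logrotate size were larger than the rsyslog limit, rsyslog would call the script but logrotate would decline to rotate, so keeping the two equal is the simplest arrangement.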

entrypoint.sh

To make sure that rsyslog uses the right hostname and not the one autogenerated by Docker, I also created an entrypoint.sh, which sets the right hostname before it starts rsyslogd in the foreground.

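#!/bin/bash

# Use KUBERNETES_NODENAME when it is set; otherwise fall back to the
# host's /etc/hostname, which is mounted into the container.
if [[ -z "${KUBERNETES_NODENAME}" ]]; then
    hostname "$(cat /etc/hostname)"
else
    hostname "${KUBERNETES_NODENAME}"
fi

# -n keeps rsyslogd in the foreground.
/usr/sbin/rsyslogd -f /etc/journald-to-syslog/rsyslog.conf -n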
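Setting the hostname needs a privileged container, so it can be handy to try the selection logic on its own first. The sketch below is a dry run of the same decision: pick_hostname is a hypothetical helper that prints the name instead of applying it, and the file it reads is a temporary stand-in for /etc/hostname.

```shell
#!/bin/sh
# pick_hostname FILE (hypothetical helper): print the hostname the entrypoint
# would choose, without changing anything on the system.
pick_hostname() {
    if [ -n "${KUBERNETES_NODENAME:-}" ]; then
        printf '%s\n' "${KUBERNETES_NODENAME}"
    else
        cat "$1"
    fi
}

# Temporary stand-in for the mounted /etc/hostname.
hostfile=$(mktemp)
echo "node-from-file" > "$hostfile"

KUBERNETES_NODENAME=""
pick_hostname "$hostfile"    # falls back to the file content

KUBERNETES_NODENAME="node-from-env"
pick_hostname "$hostfile"    # the environment variable wins

rm -f "$hostfile"
```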

K8S configuration: journald-to-syslog.yaml

The last step is to schedule the journald-to-syslog container. We will schedule it on every Kubernetes node with the DaemonSet workload.

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: journald-to-syslog
  labels:
    app: journald-to-syslog
spec:
  updateStrategy:
    type: RollingUpdate
  selector:
    matchLabels:
      daemon: journald-to-syslog
  template:
    metadata:
      name: journald-to-syslog
      labels:
        daemon: journald-to-syslog
    spec:
      tolerations:
      - operator: "Exists"
        effect: "NoSchedule"
      - operator: "Exists"
        effect: "NoExecute"
      containers:
      - name: journald-to-syslog
        image: outcoldsolutions/journald-to-syslog
        securityContext:
          privileged: true
        resources:
          limits:
            cpu: 1
            memory: 128Mi
          requests:
            cpu: 50m
            memory: 32Mi
        volumeMounts:
        - name: machine-id
          mountPath: /etc/machine-id
          readOnly: true
        - name: hostname
          mountPath: /etc/hostname
          readOnly: true
        - name: journal
          mountPath: /var/log/journal
          readOnly: true
        - name: var-log
          mountPath: /rootfs/var/log/
      volumes:
      - name: machine-id
        hostPath:
          path: /etc/machine-id
      - name: hostname
        hostPath:
          path: /etc/hostname
      - name: var-log
        hostPath:
          path: /var/log
      - name: journal
        hostPath:
          path: /var/log/journal

A few highlights:

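- line 23 (the end of the tolerations block) allows us to schedule this DaemonSet on masters as well.

- line 38 (the /etc/machine-id mount) tells journald the right ID, where to look for the journal logs.

- line 41 (the /etc/hostname mount) helps us to know the hostname of the host Linux.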

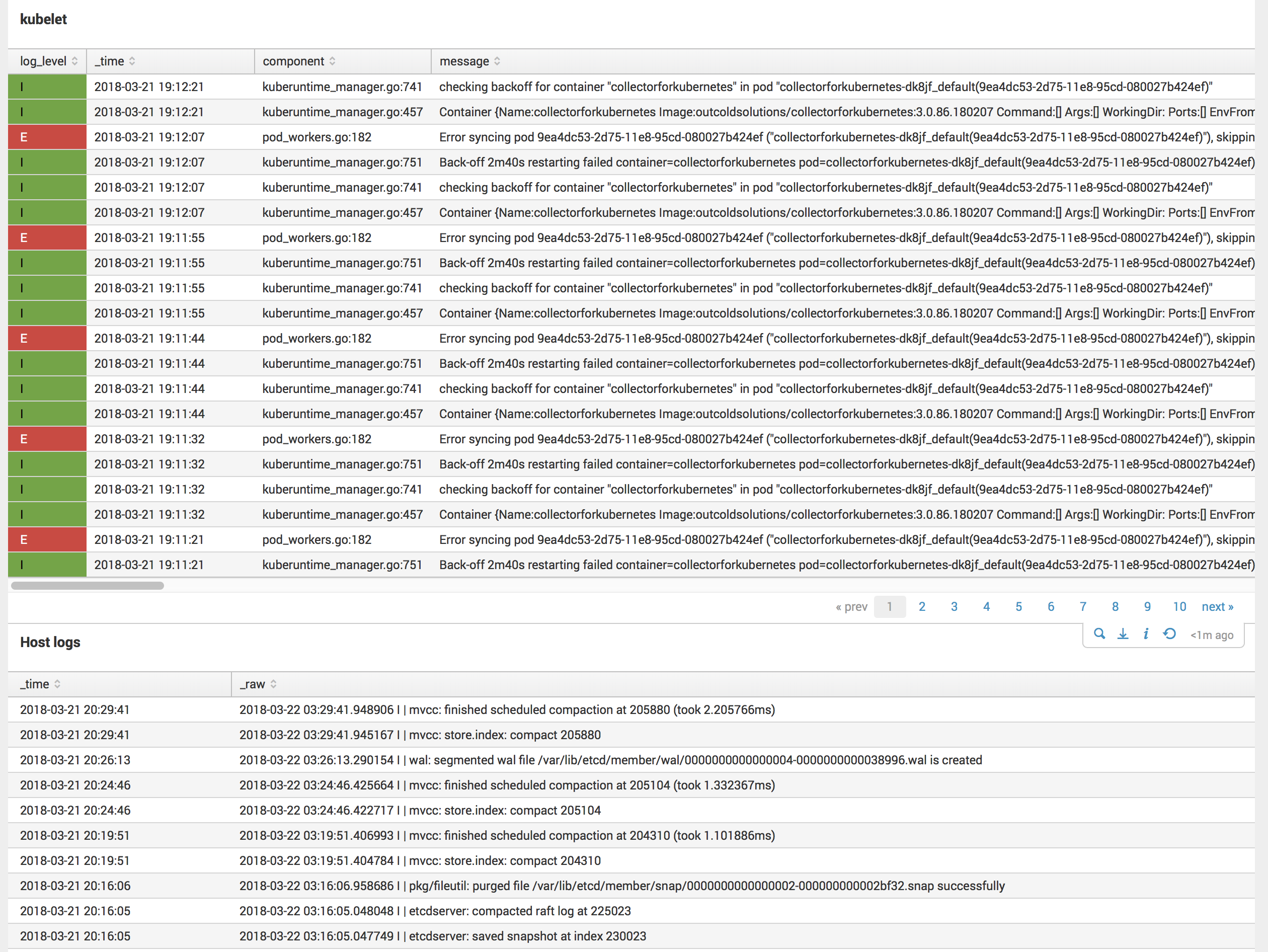

Splunk and Monitoring Kubernetes application

If you have not done so yet, you can install collectord and our application in Splunk by following our manual on How to get started with Kubernetes.

One small modification required for Monitoring Kubernetes v3.0 is to change macro_kubernetes_host_logs_kubelet from syslog_component::kubelet to syslog_component::kubelet*, because on Tectonic the kubelet process is called kubelet-wrapper.

You can do that in Settings → Advanced Search → Search macros. Modify macro_kubernetes_host_logs_kubelet to

(`macro_kubernetes_host_logs` AND (syslog_component::kubelet* OR source="*/kubelet.log*"))

After that, you should be able to see host logs, including kubelet logs.
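To illustrate why the trailing wildcard in the macro matters, here is a small sketch that uses a shell glob as a stand-in for the Splunk wildcard. It is only an analogy, since Splunk evaluates the search itself. The matches_kubelet helper is a hypothetical name, and kube-proxy is included purely as an example of a name that should not match.

```shell
#!/bin/sh
# matches_kubelet NAME (hypothetical helper): succeed if NAME matches kubelet*.
matches_kubelet() {
    case "$1" in
        kubelet*) return 0 ;;
        *)        return 1 ;;
    esac
}

# kubelet-wrapper is the process name on Tectonic; kube-proxy is illustrative.
for component in kubelet kubelet-wrapper kube-proxy; do
    if matches_kubelet "$component"; then
        echo "$component: matched"
    else
        echo "$component: not matched"
    fi
done
```

An exact comparison against kubelet would miss kubelet-wrapper, which is the situation the original macro runs into on Tectonic.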