Forwarding pretty JSON logs to Splunk

March 11, 2018

One of the problems our collector solves for our customers is support for multi-line messages, including the lines generated by rendering pretty JSON messages.

If you can avoid pretty-printing JSON in your logs, I suggest you do. For example, if you want to see pretty JSON messages during development, you can keep a configuration flag (for example, an environment variable) that changes how messages are rendered. But if you are dealing with software you did not write, read on.
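A minimal sketch of such a flag in Python follows. It assumes a hypothetical `LOG_PRETTY_JSON` environment variable. Messages are rendered on a single line unless the flag is set, so the logs that reach the collector stay one message per line by default.

```python
import json
import os
from typing import Any, Dict, Optional


def pretty_logs_enabled() -> bool:
    """Read the hypothetical LOG_PRETTY_JSON flag from the environment."""
    return os.environ.get("LOG_PRETTY_JSON", "").lower() in ("1", "true", "yes")


def render(message: Dict[str, Any], pretty: Optional[bool] = None) -> str:
    """Render a log message as single-line JSON, or as indented JSON when the flag is on."""
    if pretty is None:
        pretty = pretty_logs_enabled()
    return json.dumps(message, indent=2 if pretty else None)


if __name__ == "__main__":
    # Run with LOG_PRETTY_JSON=1 during development to get multi-line output.
    print(render({"level": "info", "msg": "service started"}))
```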

As an example, I took sample JSON messages from http://json.org/example.html and wrote a simple Python script, `app.py`, that renders them to stdout.

```python
import json

examples = [
    '{ "glossary": { "title": "example glossary", "GlossDiv": { "title": "S", "GlossList": { "GlossEntry": { "ID": "SGML", "SortAs": "SGML", "GlossTerm": "Standard Generalized Markup Language", "Acronym": "SGML", "Abbrev": "ISO 8879:1986", "GlossDef": { "para": "A meta-markup language, used to create markup languages such as DocBook.", "GlossSeeAlso": ["GML", "XML"] }, "GlossSee": "markup" } } } }}',
    '{"menu": { "id": "file", "value": "File", "popup": { "menuitem": [ {"value": "New", "onclick": "CreateNewDoc()"}, {"value": "Open", "onclick": "OpenDoc()"}, {"value": "Close", "onclick": "CloseDoc()"} ] }}}',
    '{"widget": { "debug": "on", "window": { "title": "Sample Konfabulator Widget", "name": "main_window", "width": 500, "height": 500 }, "image": { "src": "Images/Sun.png", "name": "sun1", "hOffset": 250, "vOffset": 250, "alignment": "center" }, "text": { "data": "Click Here", "size": 36, "style": "bold", "name": "text1", "hOffset": 250, "vOffset": 100, "alignment": "center", "onMouseUp": "sun1.opacity = (sun1.opacity / 100) * 90;" }}}',
    '{"web-app": { "servlet": [ { "servlet-name": "cofaxCDS", "servlet-class": "org.cofax.cds.CDSServlet", "init-param": { "configGlossary:installationAt": "Philadelphia, PA", "configGlossary:adminEmail": "ksm@pobox.com", "configGlossary:poweredBy": "Cofax", "configGlossary:poweredByIcon": "/images/cofax.gif", "configGlossary:staticPath": "/content/static", "templateProcessorClass": "org.cofax.WysiwygTemplate", "templateLoaderClass": "org.cofax.FilesTemplateLoader", "templatePath": "templates", "templateOverridePath": "", "defaultListTemplate": "listTemplate.htm", "defaultFileTemplate": "articleTemplate.htm", "useJSP": false, "jspListTemplate": "listTemplate.jsp", "jspFileTemplate": "articleTemplate.jsp", "cachePackageTagsTrack": 200, "cachePackageTagsStore": 200, "cachePackageTagsRefresh": 60, "cacheTemplatesTrack": 100, "cacheTemplatesStore": 50, "cacheTemplatesRefresh": 15, "cachePagesTrack": 200, "cachePagesStore": 100, "cachePagesRefresh": 10, "cachePagesDirtyRead": 10, "searchEngineListTemplate": "forSearchEnginesList.htm", "searchEngineFileTemplate": "forSearchEngines.htm", "searchEngineRobotsDb": "WEB-INF/robots.db", "useDataStore": true, "dataStoreClass": "org.cofax.SqlDataStore", "redirectionClass": "org.cofax.SqlRedirection", "dataStoreName": "cofax", "dataStoreDriver": "com.microsoft.jdbc.sqlserver.SQLServerDriver", "dataStoreUrl": "jdbc:microsoft:sqlserver://LOCALHOST:1433;DatabaseName=goon", "dataStoreUser": "sa", "dataStorePassword": "dataStoreTestQuery", "dataStoreTestQuery": "SET NOCOUNT ON;select test=\'test\';", "dataStoreLogFile": "/usr/local/tomcat/logs/datastore.log", "dataStoreInitConns": 10, "dataStoreMaxConns": 100, "dataStoreConnUsageLimit": 100, "dataStoreLogLevel": "debug", "maxUrlLength": 500}}, { "servlet-name": "cofaxEmail", "servlet-class": "org.cofax.cds.EmailServlet", "init-param": { "mailHost": "mail1", "mailHostOverride": "mail2"}}, { "servlet-name": "cofaxAdmin", "servlet-class": "org.cofax.cds.AdminServlet"}, { "servlet-name": "fileServlet", "servlet-class": "org.cofax.cds.FileServlet"}, { "servlet-name": "cofaxTools", "servlet-class": "org.cofax.cms.CofaxToolsServlet", "init-param": { "templatePath": "toolstemplates/", "log": 1, "logLocation": "/usr/local/tomcat/logs/CofaxTools.log", "logMaxSize": "", "dataLog": 1, "dataLogLocation": "/usr/local/tomcat/logs/dataLog.log", "dataLogMaxSize": "", "removePageCache": "/content/admin/remove?cache=pages&id=", "removeTemplateCache": "/content/admin/remove?cache=templates&id=", "fileTransferFolder": "/usr/local/tomcat/webapps/content/fileTransferFolder", "lookInContext": 1, "adminGroupID": 4, "betaServer": true}}], "servlet-mapping": { "cofaxCDS": "/", "cofaxEmail": "/cofaxutil/aemail/*", "cofaxAdmin": "/admin/*", "fileServlet": "/static/*", "cofaxTools": "/tools/*"}, "taglib": { "taglib-uri": "cofax.tld", "taglib-location": "/WEB-INF/tlds/cofax.tld"}}}',
    '{"menu": { "header": "SVG Viewer", "items": [ {"id": "Open"}, {"id": "OpenNew", "label": "Open New"}, null, {"id": "ZoomIn", "label": "Zoom In"}, {"id": "ZoomOut", "label": "Zoom Out"}, {"id": "OriginalView", "label": "Original View"}, null, {"id": "Quality"}, {"id": "Pause"}, {"id": "Mute"}, null, {"id": "Find", "label": "Find..."}, {"id": "FindAgain", "label": "Find Again"}, {"id": "Copy"}, {"id": "CopyAgain", "label": "Copy Again"}, {"id": "CopySVG", "label": "Copy SVG"}, {"id": "ViewSVG", "label": "View SVG"}, {"id": "ViewSource", "label": "View Source"}, {"id": "SaveAs", "label": "Save As"}, null, {"id": "Help"}, {"id": "About", "label": "About Adobe CVG Viewer..."} ]}}'
]


def main():
    for idx, j in enumerate(examples):
        indent = None
        # Pretty-print every second message (idx 1, 3, ...); the others stay on one line.
        if idx % 2 == 1:
            indent = ' '
        print(json.dumps(json.loads(j), indent=indent))
        # Separate messages with an empty line.
        print()


if __name__ == "__main__":
    main()
```

The script prints every second JSON message in a pretty (multi-line) format. Here is the beginning of its output:

```
{"glossary": {"title": "example glossary", "GlossDiv": {"title": "S", "GlossList": {"GlossEntry": {"ID": "SGML", "SortAs": "SGML", "GlossTerm": "Standard Generalized Markup Language", "Acronym": "SGML", "Abbrev": "ISO 8879:1986", "GlossDef": {"para": "A meta-markup language, used to create markup languages such as DocBook.", "GlossSeeAlso": ["GML", "XML"]}, "GlossSee": "markup"}}}}}

{
 "menu": {
  "id": "file",
  "value": "File",
  "popup": {
   "menuitem": [
    {
     "value": "New",
     "onclick": "CreateNewDoc()"
    },
    {
     "value": "Open",
     "onclick": "OpenDoc()"
    },
    {
     "value": "Close",
     "onclick": "CloseDoc()"
    }
   ]
  }
 }
}

...
```

The examples below are based on our Monitoring Docker application, but the same solution applies to Kubernetes and OpenShift as well. You can find the relevant documentation for Kubernetes and OpenShift (including how to get started and how to configure the collector) under docs.

First, let's see what to expect when we run the collector with the default configuration from our manual and run the script above in Docker.

```shell
docker run --name json_example --volume $PWD:/app -w /app python:3 python3 app.py
```

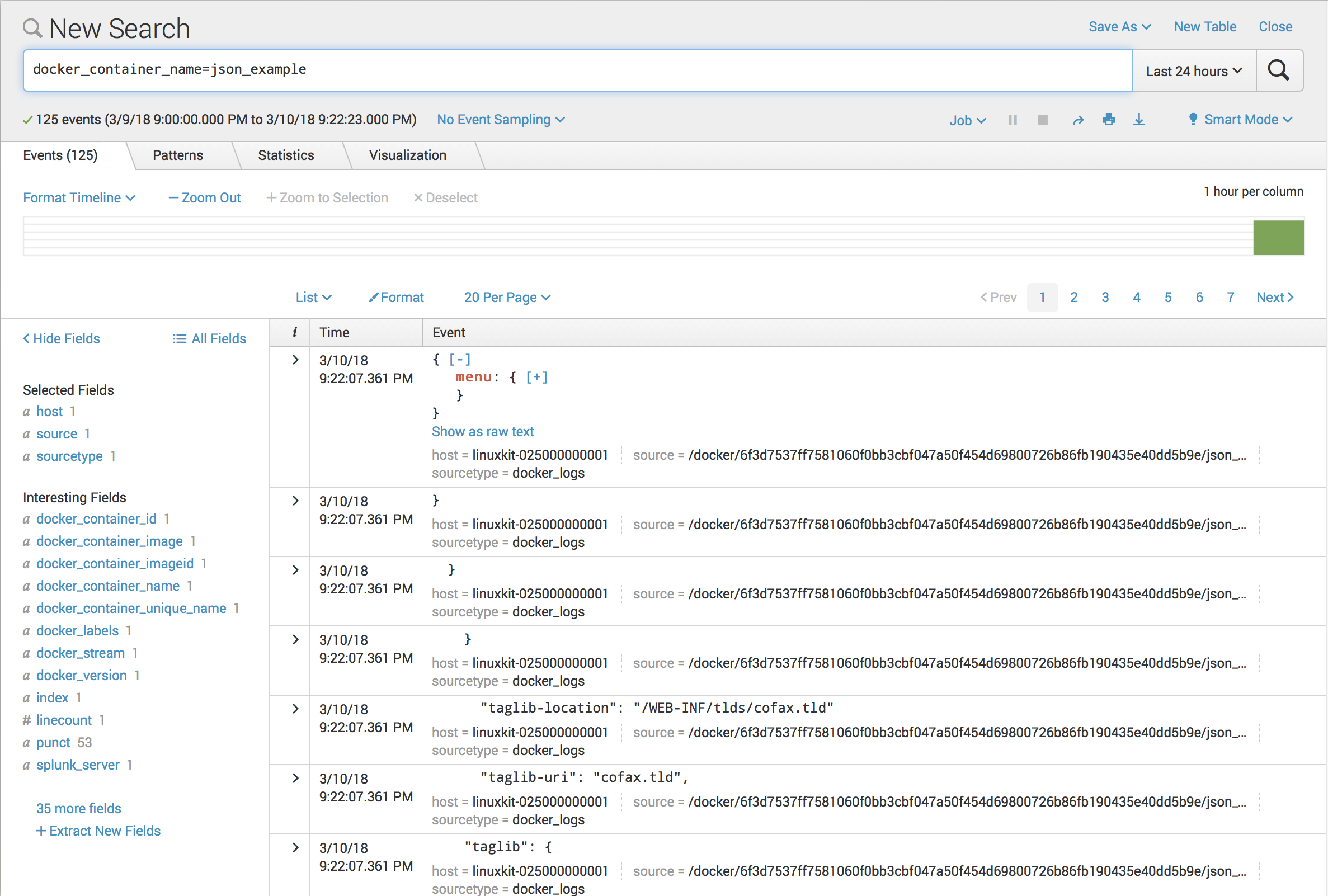

That is not the result you want in Splunk: the lines of each pretty-printed message are not combined into a single event.

To forward each pretty-printed JSON message as one message, you need to define a join rule in the configuration file.

```ini
[pipe.join::json]
# Configure for which containers we want to apply this match rule. You can configure it for
# containers running with a specific image as below or with
# matchRegex.docker_container_name = ^json_example$
matchRegex.docker_container_image = ^python:3$
# We want to define that all messages should start with `{`
patternRegex = ^{
```
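To see why `^{` is enough for this output, the sketch below models a join rule the way we read it: a line that matches `patternRegex` starts a new event, and every other line is appended to the event before it. The `join_lines` function is hypothetical and written only for illustration; it is a simplified model, not the collector's implementation.

```python
import json
import re
from typing import List


def join_lines(lines: List[str], pattern: str) -> List[str]:
    """Group log lines into events using a join-rule style pattern.

    A line matching `pattern` starts a new event; any other line is appended
    to the current event (a first line that does not match still starts one).
    """
    start = re.compile(pattern)
    events: List[List[str]] = []
    for line in lines:
        if start.search(line) or not events:
            events.append([line])
        else:
            events[-1].append(line)
    return ["\n".join(event) for event in events]


if __name__ == "__main__":
    # A shortened version of the output of app.py: one compact JSON message and
    # one pretty-printed message, each followed by an empty line.
    output = [
        '{"glossary": {"title": "example glossary"}}',
        '',
        '{',
        ' "menu": {',
        '  "id": "file",',
        '  "value": "File"',
        ' }',
        '}',
        '',
    ]
    for event in join_lines(output, r"^{"):
        json.loads(event)  # raises ValueError if an event is not a complete JSON document
        print(repr(event))
```

Under this model, the indented body lines, the closing `}` and the empty separator lines never match `^{`, so each message ends up as one event that parses as a complete JSON document.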

One way to configure the join rule is by using environment variables in the format `COLLECTOR__{ANY_NAME}={section}__{key}={value}`.

```shell
docker run -d \
    --name collectorfordocker \
    --volume /sys/fs/cgroup:/rootfs/sys/fs/cgroup:ro \
    --volume /proc:/rootfs/proc:ro \
    --volume /var/log:/rootfs/var/log:ro \
    --volume /var/lib/docker/containers/:/var/lib/docker/containers/:ro \
    --volume /var/run/docker.sock:/var/run/docker.sock:ro \
    --volume collector_data:/data/ \
    --cpus=1 \
    --cpu-shares=102 \
    --memory=256M \
    --restart=always \
    --env "COLLECTOR__SPLUNK_URL=output.splunk__url=https://hec.example.com:8088/services/collector/event/1.0" \
    --env "COLLECTOR__SPLUNK_TOKEN=output.splunk__token=B5A79AAD-D822-46CC-80D1-819F80D7BFB0" \
    --env "COLLECTOR__SPLUNK_INSECURE=output.splunk__insecure=true" \
    --env "COLLECTOR__ACCEPTLICENSE=general__acceptLicense=true" \
    --env "COLLECTOR__SPLUNK_JSON1=pipe.join::json__patternRegex=^{" \
    --env "COLLECTOR__SPLUNK_JSON2=pipe.join::json__matchRegex.docker_container_image=^python:3$" \
    --privileged \
    outcoldsolutions/collectorfordocker:3.0.86.180207
```
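To make the mapping from a configuration section to environment variables concrete, here is a small hypothetical Python helper (not part of the collector) that formats join-rule settings as `--env` arguments in the `COLLECTOR__{ANY_NAME}={section}__{key}={value}` format. The function names and the `SPLUNK_JSON` name prefix are our own choices; the prefix plus a running number only serves to give every variable a distinct name, so no setting overwrites another.

```python
import shlex
from typing import Dict, List


def to_collector_env(name: str, section: str, key: str, value: str) -> str:
    """Format one setting as COLLECTOR__{ANY_NAME}={section}__{key}={value}."""
    return f"COLLECTOR__{name}={section}__{key}={value}"


def join_rule_env_args(rule: str, settings: Dict[str, str],
                       prefix: str = "SPLUNK_JSON") -> List[str]:
    """Build docker `--env` arguments for every key of a [pipe.join::<rule>] section."""
    section = f"pipe.join::{rule}"
    args: List[str] = []
    # Number the variables (SPLUNK_JSON1, SPLUNK_JSON2, ...) to keep names unique.
    for i, (key, value) in enumerate(settings.items(), start=1):
        args += ["--env", to_collector_env(f"{prefix}{i}", section, key, value)]
    return args


if __name__ == "__main__":
    args = join_rule_env_args("json", {
        "patternRegex": "^{",
        "matchRegex.docker_container_image": "^python:3$",
    })
    # Quote each argument so it can be pasted into a shell command line.
    print(" ".join(shlex.quote(arg) for arg in args))
```

Running it prints the two `--env` arguments that correspond to the `SPLUNK_JSON1` and `SPLUNK_JSON2` lines in the command above.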

Let's try to rerun our example.

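```shell
# Remove the container left over from the first run; container names must be unique.
docker rm json_example
docker run --name json_example --volume $PWD:/app -w /app python:3 python3 app.py
```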

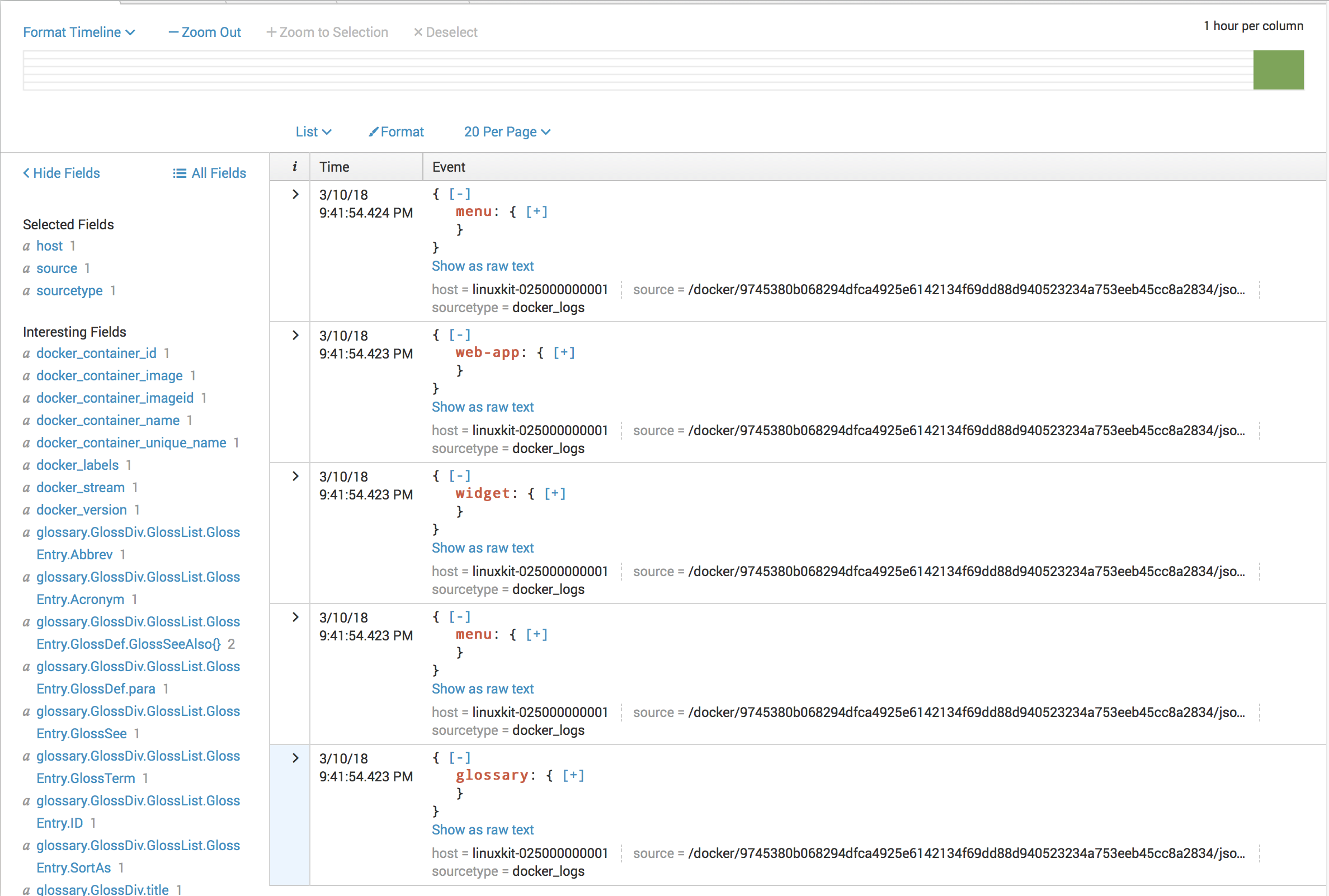

This time, we see everything as expected in Splunk.

If your output mixes JSON messages with other types of messages, you can instead configure the rule so that a new message starts with any line that does not begin with `}` or a whitespace character.

```ini
[pipe.join::json]
# Configure for which containers we want to apply this match rule. You can configure it for
# containers running with a specific image as below or with
# matchRegex.docker_container_name = ^json_example$
matchRegex.docker_container_image = ^python:3$
# We want to define that all messages should not start with `}` or any whitespace character.
patternRegex = ^[^}\s]
```
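A quick way to check what the second pattern does is to run it against a few typical lines. The sketch below uses Python's `re` module; this simple pattern (a negated character class with `\s`) reads the same in most regex dialects. The sample lines and the `starts_new_message` helper are made up for illustration. Assuming, as we read the join rule, that a matching line starts a new message and other lines are appended to the previous one, the opening `{` and plain-text lines start new messages, while indented JSON lines, closing braces and empty lines are joined. With `^{`, a plain-text line such as `Starting worker` would not match and would be appended to whatever came before it.

```python
import re

# patternRegex from the second join rule: a new message starts with any
# character that is neither "}" nor whitespace.
NEW_MESSAGE = re.compile(r"^[^}\s]")


def starts_new_message(line: str) -> bool:
    """Return True if `line` would begin a new message under ^[^}\\s]."""
    return NEW_MESSAGE.search(line) is not None


if __name__ == "__main__":
    sample = [
        "Starting worker",   # plain-text line: new message
        "{",                 # opening brace of pretty JSON: new message
        ' "id": "file",',    # indented JSON body: joined to the previous line
        "}",                 # closing brace: joined
        "",                  # empty separator line: joined
        '{"id": "file"}',    # compact JSON: new message
    ]
    for line in sample:
        print(starts_new_message(line), repr(line))
```

Note that this rule relies on the pretty-printed JSON being indented; if every line started at the first column, each line would again start a new message.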

Join rules are a powerful tool; read more about them in our documentation: Join Rules.